from admiralpatrick@lemmy.world to fediverse@lemmy.world on 22 Aug 2025 19:58

https://lemmy.world/post/34832383

“Antiyanks” is back at it again and has switched tactics to spamming a massive number of comments in a short period of time. In addition to being annoying (and sad and pathetic), it’s having a deleterious effect on performance and drowns out any discussions happening in those posts. That spam federates, and so do the eventual removals, so the impact isn’t limited to the posts being targeted.

Looking at the site config for the home instance of the latest three alts, the rate limits were all 99999999. 🤦‍♂️

Rate limits are a bit confusing, but they mean: X number of requests per Y seconds per IP address.

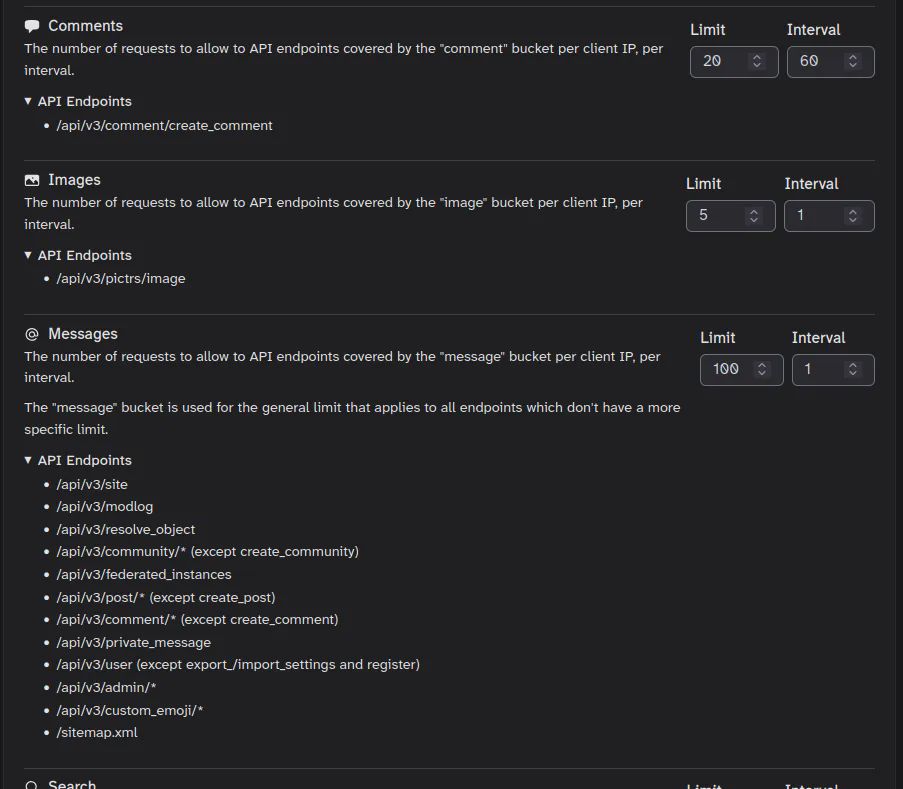

The comment API endpoint has its own, dedicated bucket. I don’t recall the defaults, but they’re probably higher than you need unless you’re catering to VPN users who would share an IP.

Assuming your server config is correctly passing the client IP via the XFF header, 20 calls to the /create_comment endpoint per minute (60 seconds) per client IP should be sufficient for most cases, though feel free to adjust to your specific requirements.

Edit: A couple of instances accidentally set the “Messages” bucket too low. That bucket is a bit of a catch-all for API endpoints that don’t fit a more specific bucket. You’ll want to leave that one relatively high compared to the rest. It’s named “Messages” but it covers far more than just DMs.
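A sketch of how a catch-all bucket behaves: requests that match a dedicated bucket use it, and everything else falls through to “message”. The paths and the choice of which endpoints get dedicated buckets are assumptions for illustration, not Lemmy’s actual routing table.

```python
# Hypothetical (method, path) -> bucket table; the real mapping lives in Lemmy's source.
DEDICATED_BUCKETS = {
    ("POST", "/api/v3/comment"): "comment",
    ("POST", "/api/v3/post"): "post",
    ("POST", "/api/v3/user/register"): "register",
    ("GET", "/api/v3/search"): "search",
}


def bucket_for(method: str, path: str) -> str:
    """Return the rate-limit bucket for a request, defaulting to the catch-all."""
    return DEDICATED_BUCKETS.get((method.upper(), path), "message")


print(bucket_for("post", "/api/v3/comment"))   # comment
print(bucket_for("GET", "/api/v3/post/list"))  # message
print(bucket_for("GET", "/api/v3/site"))       # message
```

Under this model, ordinary reads such as loading the front page land in the catch-all. That is consistent with instances going unresponsive when “Messages” was set low.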


I don’t operate a fed server, but just curious. Couldn’t/shouldn’t rate limits be on a per-user/per-session-token basis to avoid the vpn issue you mentioned?

You’ll have to talk to the lemmy devs about that. I’m a retired admin, but last I was aware, they’re based on client IP.
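For comparison with the per-IP behavior described above, here is a hypothetical keying scheme that uses the account when a request is authenticated and falls back to the client IP otherwise. This is not how Lemmy works per this thread. One trade-off is that a per-account key alone gives a spammer with many alts on one address one bucket per alt.

```python
from typing import Optional


def rate_limit_key(client_ip: str, user_id: Optional[int]) -> str:
    """Hypothetical keying: per account when authenticated, per client IP otherwise."""
    if user_id is not None:
        return f"user:{user_id}"
    return f"ip:{client_ip}"


vpn_ip = "192.0.2.50"  # one VPN exit address shared by several people (documentation range)
print(rate_limit_key(vpn_ip, 1) == rate_limit_key(vpn_ip, 2))        # False: separate buckets
print(rate_limit_key(vpn_ip, None) == rate_limit_key(vpn_ip, None))  # True: anonymous requests share one
```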

Isn't the lemmy main dev that tankie guy you guys hate? Rich coming from you asking labour from them lmao

<img alt="" src="https://lemmy.blahaj.zone/pictrs/image/31893ace-f6e1-475e-aa64-a1735f0cbbf1.webp">

I would hope you are not running a honeypot 👀

And that would solve issues with the reverse proxy not forwarding the IP correctly, right?

I rarely block anyone, and never a proper shitposter, but holy shit, I had to block like half a dozen accounts today.

What is a shitposter?

An artisté

But you already knew that, good sir.

Wall decor of a glistening turd

mmmmmmmeeeeeeeeeeeeeeeeeee :3

.

You know.

Thanks for sharing

Thanks for the heads up. I don’t know what ‘Antiyanks’ is, but I already had to ban one comment spammer.

The rate limits are indeed a bit confusing. The settings are:

Rate Limit: X Per Second: Y

I understand this to be ‘X for every Y seconds’

So, a ‘Comments’ Rate limit: 10, Per second: 60, means a maximum of 10 comments per minute, correct?

Maybe the reason you see 99999999 is due to troubleshooting. I have increased my instance’s limits multiple times while troubleshooting server issues, because the meaning of the settings was not clear to me. These limits are usually not the reason for the server issue, but I set some high number and did not bring them back down after the issues were resolved.

I have lowered them now to more reasonable numbers. I will also be more strict with new applications for the time being.

Correct, per client IP.

Could be. I try not to speculate on “why” when I don’t have access to the answer lol.

I don’t recall any of them being from mander (unless they were dealt with before I started testing?), but thanks for taking preventative measures :)
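The “Rate limit: 10, Per second: 60” reading confirmed above (10 per minute, per client IP) leaves open how bursts are handled. Below is a sketch of one common interpretation, a token bucket. The thread doesn’t establish whether Lemmy uses this or a window, so treat it as illustrative.

```python
class TokenBucket:
    """Capacity of `rate` tokens, refilled at rate/per_seconds tokens per second."""

    def __init__(self, rate: int, per_seconds: float):
        self.capacity = float(rate)
        self.refill_per_sec = rate / per_seconds
        self.tokens = float(rate)
        self.last = 0.0

    def allow(self, now: float) -> bool:
        # Refill for the time elapsed since the last call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.refill_per_sec)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


bucket = TokenBucket(rate=10, per_seconds=60)
burst = [bucket.allow(now=0.0) for _ in range(11)]
print(burst.count(True))      # 10: the full allowance can be spent at once
print(bucket.allow(now=3.0))  # False: only half a token has refilled
print(bucket.allow(now=6.0))  # True: 10/60 tokens per second is one token every 6 s
```

Under either model the long-run average is the same 10 per minute. The difference is whether a client can spend the whole allowance in a single burst.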

It’s the codename for a particular long-term troll and is based off of their original username pattern (which they still use sometimes). I have reason to believe it’s also the same troll that used to spam the racist stuff in Science Memes.

These are most of today’s batch (minus the JON333 which was just a garden-variety spammer that made it into the last screenshot).

<img alt="" src="https://lemmy.world/pictrs/image/250bce69-cde5-4af5-9572-4f1c825bd86b.webp">

<img alt="" src="https://lemmy.world/pictrs/image/c08121cf-18f5-4317-9a66-60f7debe4500.webp">

<img alt="" src="https://lemmy.world/pictrs/image/556d4a36-d726-4edb-9c22-fe06a2b27255.webp">

Setting the limits to more reasonable values, like ‘20 posts per minute’, causes the server to stop serving posts. My front page goes blank.

So, I am starting to think that ‘20 posts per minute’ means ‘requesting 20 posts per minute’ and not ‘creating 20 posts per minute’.

I am still having doubts about what these limits mean, but setting reasonable numbers seems to break things, unfortunately.

I replied to your other comment, but most likely cause is the API server not getting the correct client IP. If that’s not setup correctly, then it will think every request is from the reverse proxy’s IP and trigger the limit.

Unless they’re broken again. Rate limiting seems to break every few releases, but my instance was on 0.19.12 before I shut it down, and those values worked.
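Here is a sketch of that failure mode. It assumes a backend that takes the client IP from X-Forwarded-For and falls back to the TCP peer address when the header is missing, which is a hypothetical simplification of what Lemmy does. The proxy address is also hypothetical.

```python
from typing import Mapping


def client_ip(peer_addr: str, headers: Mapping[str, str]) -> str:
    """Hypothetical backend logic: use X-Forwarded-For if present, else the TCP peer."""
    xff = headers.get("X-Forwarded-For")
    if xff:
        return xff.split(",")[0].strip()
    return peer_addr


PROXY_IP = "10.0.0.2"  # hypothetical address of the reverse proxy
clients = ["203.0.113.10", "203.0.113.11", "198.51.100.12"]

# Proxy does not set X-Forwarded-For: every request looks like it came from the proxy.
broken = {client_ip(PROXY_IP, {}) for _ in clients}
# Proxy sets X-Forwarded-For: each client keeps its own rate-limit key.
fixed = {client_ip(PROXY_IP, {"X-Forwarded-For": c}) for c in clients}
print(len(broken), len(fixed))  # 1 3
```

With a single key, a “20 per 60 seconds” limit applies to the whole instance combined. That would explain a blank front page and “Too many requests” errors even with sensible values.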

Thanks! Yes, I saw both messages and I am now going through the NGINX config and trying to understand what could be going on. To be honest, Lemmy is the hobby that taught me what a ‘reverse proxy’ and a ‘vps’ are. Answering a question such as ‘Are you sending the client IP in the X-Forwarded-For header?’ is probably straightforward for a professional, but for me it involves quite a bit of learning 😅

At location /, my nginx config includes:

So, I think that the answer to your question is probably ‘yes’. If you did have these rate limits and they were stable, the more likely explanation is that something about my configuration is sub-optimal. I will look into it and continue learning, but I will need to keep my limits a bit high for the time being and stay alert.

Yeah, you are setting it, but that’s assuming the variable `$proxy_add_x_forwarded_for` has the correct IP. But the config itself is correct. Is Nginx directly receiving traffic from the clients, or is it behind another reverse proxy?

Do you have a separate location block for `/api` by chance, and is the `proxy_set_header` directive set there, too? Unless I’m mistaken, location blocks don’t inherit that from the `/` location.

Yes, I see this there. Most of the nginx config is from the ‘default’ nginx config in the Lemmy repo from a few years ago. My understanding is somewhat superficial; I don’t actually know where the variable `$proxy_add_x_forwarded_for` gets populated, for example. I did not know that this contained the client’s IP.

```nginx
# backend
location ~ ^/(api|pictrs|feeds|nodeinfo|.well-known) {
    proxy_pass http://0.0.0.0:8536/;
    proxy_http_version 1.1;
    proxy_set_header Upgrade $http_upgrade;
    proxy_set_header Connection "upgrade";

    # Rate limit
    limit_req zone=mander_ratelimit burst=30000 nodelay;

    # Add IP forwarding headers
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header Host $host;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
}
```

I need to do some reading 😁

nginx.org/en/docs/…/ngx_http_proxy_module.html

`$proxy_add_x_forwarded_for` is a built-in variable: if the incoming request already has an X-Forwarded-For header, it is that header with the built-in `$remote_addr` value appended; if not, it is simply `$remote_addr`. The former case would be when Nginx is behind another reverse proxy, and the latter case when Nginx is exposed directly to the client.
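A small emulation of how that variable is built, following the nginx documentation (incoming header, then `, ` and `$remote_addr`). The addresses are hypothetical. The last case shows why overwriting the header with `$remote_addr` can be preferable on an edge-facing Nginx. If the backend keys on the leftmost entry, a client could choose its own rate-limit key. Whether Lemmy reads the leftmost entry is an assumption here; the thread doesn’t say.

```python
from typing import Optional


def proxy_add_x_forwarded_for(incoming_xff: Optional[str], remote_addr: str) -> str:
    """Mimic nginx's $proxy_add_x_forwarded_for: append $remote_addr to any incoming header."""
    if incoming_xff:
        return f"{incoming_xff}, {remote_addr}"
    return remote_addr


# Nginx exposed directly to clients, no incoming header: same as $remote_addr.
print(proxy_add_x_forwarded_for(None, "203.0.113.10"))        # 203.0.113.10
# Nginx behind another proxy (hypothetical 10.0.0.5) that already set the header.
print(proxy_add_x_forwarded_for("203.0.113.10", "10.0.0.5"))  # 203.0.113.10, 10.0.0.5
# An edge-facing Nginx also passes along whatever a client claims in its own header.
spoofed = proxy_add_x_forwarded_for("192.0.2.99", "203.0.113.10")
print(spoofed.split(",")[0].strip())                           # 192.0.2.99
```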

Assuming this Nginx is exposed directly to the clients, maybe try changing the bottom section like this to use the `$remote_addr` value for the XFF header. The commented one is just to make rolling back easier. Nginx will need to be reloaded after making the change, naturally.

```nginx
# Add IP forwarding headers
proxy_set_header X-Real-IP $remote_addr;
proxy_set_header Host $host;
# proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
proxy_set_header X-Forwarded-For $remote_addr;
```

Thanks!

I was able to crash the instance for a few minutes, but I think I have a better idea of where the problem is. The `$remote_addr` variable seems to work just the same.

In the rate limit options there is a limit for ‘Message’. Common sense tells me that this means ‘direct message’, but setting this to a low number is quite bad. While testing, I eventually set it to ‘1 per minute’, and the instance became unresponsive until I modified the settings in the database manually. If I give this setting a high number, then I can adjust the other settings without problems.

“Message” bucket is kind of a general purpose bucket that covers a lot of different endpoints. I had to ask the lemmy devs what they were back when I was adding a config section in Tesseract for the rate limits.

These may be a little out of date, but I believe they’re still largely correct:

<img alt="" src="https://lemmy.world/pictrs/image/4dc757e7-f68d-4e68-bcc9-700d8fbe7dca.webp">

So, ultimately my problem was that I was trying to set all of the limits to what I thought were “reasonable” values simultaneously, and misunderstood what ‘Message’ meant, and so I ended up breaking things with my changes without the reason being obvious to me. I looked into the source code and I can see now that indeed ‘Messages’ refer to API calls and not direct messages, and that there is no ‘Direct Message’ rate limit.

If I let ‘Messages’ stay high I can adjust the other values to reasonable values and everything works fine.

Thanks a lot for your help!! I am surprised and happy it actually worked out and I understand a little more 😁

Awesome! Win-win.

😁 👍

Hi, I think I set “Messages” too low as well, and now no.lastname.nz is down. Any pointers on how to fix it with no frontend?

If you have DB access, the values are in the `local_site_rate_limit` table. You’ll probably have to restart Lemmy’s API container to pick up any changes if you edit the values in the DB.

100 per second is what I had in my configuration, but you may bump that up to 250 or more if your instance is larger.

Thanks:

```sql
UPDATE local_site_rate_limit SET message = 999, message_per_second = 999 WHERE local_site_id = 1;
```

Sorry, I went to sleep. Glad you were able to sort it out 😄

All good, it taught me again not to rely on ChatGPT. I even said I needed to find the right DB table and field, and it led me down a rabbit hole of editing config/env files.

Fuck AI

Haha, yeah, trusting ChatGPT with how to manipulate the database and change config files is a risky move 😆 I did use it myself to remind me of the postgresql syntax to find and alter the field.

No, they were not in mander.xyz. But I am generally quite relaxed when it comes to accepting applications. I mostly reject an applicant if it is very clear it is not an actual user, and then actively follow up on recent accounts for a short time. So the possibility of silent spammer accounts accumulating over time is always a concern.

Good to know, wink wink

Have fun! 😁

Hmmm - after changing these settings to what I think are reasonable settings, the server crashed and I am now getting ‘Too many requests’ messages… So, perhaps there is something not working so well with these rate limits, or I am still misunderstanding their meaning.

Not sure. I had mine set to 20 per 60 for a long time without issue.

Most likely cause would be the Lemmy API service not getting the correct client IP and seeing all API requests come from the reverse proxy’s IP.

Are you sending the client IP in the X-Forwarded-For header? Depending on how your inbound requests are routed, you may have to do that for every reverse proxy in the path.
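When there are several proxies in the path, each one that uses `$proxy_add_x_forwarded_for` appends a hop, and the backend then has to pick the right entry. One common approach is to walk the list from the right and skip addresses belonging to your own trusted proxies. This is similar in spirit to nginx’s realip module with `real_ip_recursive on`. Below is a sketch; the trusted networks and addresses are hypothetical.

```python
import ipaddress


def real_client_ip(xff: str, peer_addr: str, trusted: list[str]) -> str:
    """Return the rightmost address in the chain that is not one of our trusted proxies."""
    nets = [ipaddress.ip_network(n) for n in trusted]
    hops = [h.strip() for h in xff.split(",") if h.strip()] + [peer_addr]
    for hop in reversed(hops):
        if not any(ipaddress.ip_address(hop) in net for net in nets):
            return hop
    return hops[0]  # every hop is trusted; fall back to the leftmost entry


# Hypothetical chain: client -> CDN (198.51.100.x) -> nginx (10.0.0.2) -> Lemmy.
TRUSTED = ["10.0.0.0/8", "198.51.100.0/24"]
print(real_client_ip("203.0.113.10, 198.51.100.1", "10.0.0.2", TRUSTED))
# A client-supplied fake entry on the left is ignored.
print(real_client_ip("192.0.2.99, 203.0.113.10, 198.51.100.1", "10.0.0.2", TRUSTED))
```

Both calls print `203.0.113.10`. Walking from the right means only hops you control are skipped, so a forged entry added by the client never gets chosen.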

how did you fix?

Really bad thread-breaking comment spam under this post: lemmy.world/post/34824537

I was wondering about that. The same comment got spammed like 800 times.

Looks like it got cleaned up, but yeah, I saw another one with 2k comments. Once you block the offending accounts, it’s down to 20.

Threat actor behaviour.

You got me. I'm currently at the Lubyanka shoving caviar with Iran and Piotr in my tank.

You really overestimate your importance lmao

I am retarded... WTF is u talking about, boy?

Time to grow up.

I’m not an admin or really THAT technically knowledgeable when it comes to underlying infrastructure of these things, but because you mention VPN users in reference to shared IPs - would it be worth considering and mentioning mobile users or users otherwise on CGNAT networks?

For example, T-Mobile Home Internet would result in multiple users being represented by a shared public IP. Maybe these exit nodes don’t have nearly as many users behind one IP as a popular VPN service’s assigned IPs? I don’t know, but thought it might be relevant! I also understand it’s a tool geared toward combating this spam, and only so much can be weighed against the improvement.

I never got IP-blocked by any instance.

.

One of these days your mom’s gonna stop paying for your Mullvad subscription. Whaddya gonna do then?

That’s a consideration, yeah, but they’d have to all be hitting lemmy.zip (your instance) and all from the same /32 IPv4 address.

(AFAIK) CG-NAT still uses port address translation so there’s an upper limit to the number of users behind one IP address. They also are distributed geographically. So everyone would need to be in the same area on the same instance to really have that be an issue.

The more likely scenario would be multiple people in the same IPv4 household using the same instance. But 20 comments per minute, divided by two people in the house would still be 10 comments per minute. That’s still probably more than they could reasonably do.
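The arithmetic above, as a tiny helper: normalize the “X per Y seconds” setting to a per-minute figure and split it evenly across everyone sharing the IP. The ten-user case is hypothetical.

```python
def per_user_budget(limit: int, per_seconds: float, users_sharing_ip: int) -> float:
    """Requests per minute each user gets if they split one IP's bucket evenly."""
    per_minute = limit * 60 / per_seconds  # normalize "limit per per_seconds" to per minute
    return per_minute / users_sharing_ip


print(per_user_budget(20, 60, 2))   # 10.0 (the two-person household above)
print(per_user_budget(20, 60, 10))  # 2.0 (hypothetical: ten users on one IP and one instance)
```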

Edit: You mentioned T-Mobile internet. T-Mobile is pretty much all IPv6 with IPv4 connectivity via CG-NAT. lemmy.zip is also reachable over IPv6, so in that situation, it would try IPv6 first and CG-NAT likely wouldn’t even come into play.

that makes sense! thanks for the break down, and good to know!

Antiyank here. I agree, this is sloppy work. Give me some challenge idk


ok, I fucked up and now my instance is erroring :(

Fixed:

```sql
UPDATE local_site_rate_limit SET message = 999, message_per_second = 999 WHERE local_site_id = 1;
```

Seems some others (or maybe alts? I don’t have the server IP logs) are getting in on the action. Saw this a minute ago

<img alt="" src="https://sh.itjust.works/pictrs/image/852f5920-9bff-44f8-a3a8-128e07cacbc9.png">

<img alt="" src="https://sh.itjust.works/pictrs/image/9548f58a-e9f4-47ff-b223-64255691e99f.png">