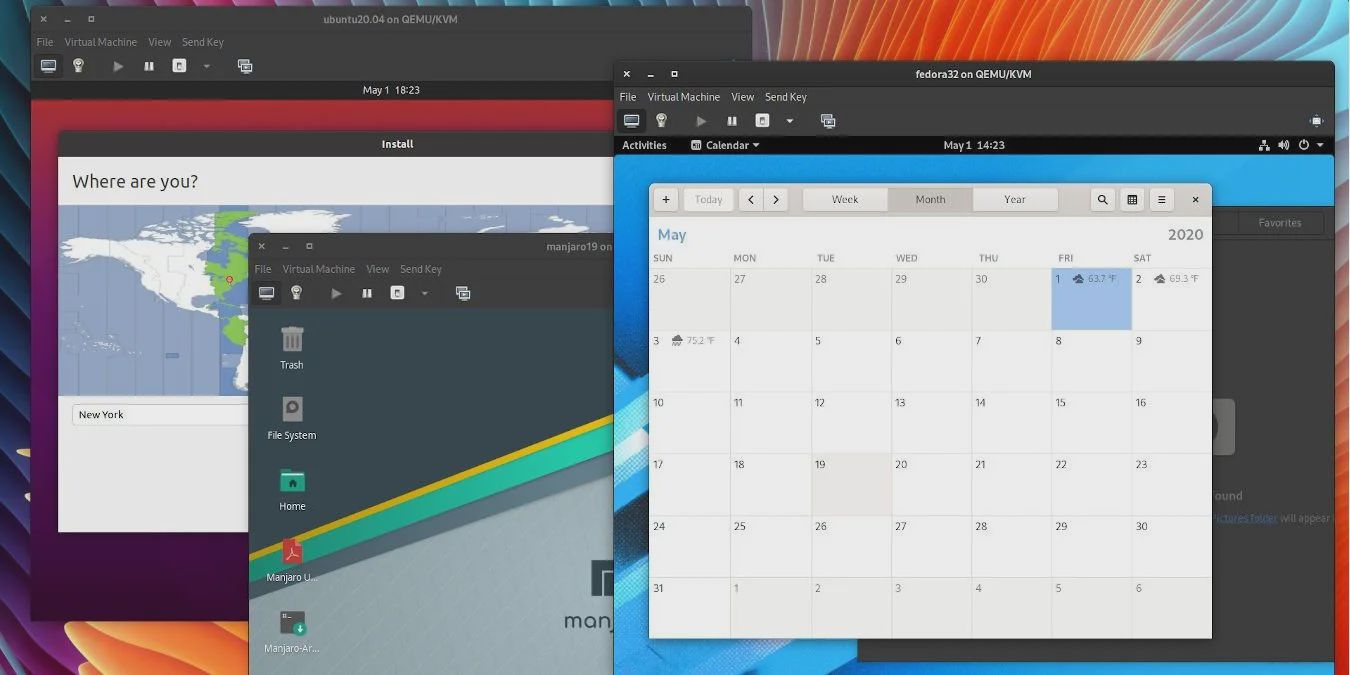

How to Get Started Using Virtual Machine Manager in Linux (Posted in response to Virtual box and VMware)

(www.maketecheasier.com)

from possiblylinux127@lemmy.zip to linux@lemmy.ml on 27 Apr 2024 23:20

https://lemmy.zip/post/14416287

If you run Windows make sure to install the virtio drivers

docs.fedoraproject.org/…/creating-windows-virtual…

Is there an equivalent or something similar to the “Use host i/o cache” setting that VirtualBox has? Last time I tried virt-manager, the install time of the VM was incredibly slow because of the terrible write speed to my HDD. VirtualBox fixes that issue with the host i/o cache setting.

I think proxmox or qemu might interest you pve.proxmox.com/wiki/Performance_Tweaks#Disk_Cach…

Qemu pve.proxmox.com/wiki/Performance_Tweaks#Disk_Cach…

Usually setting the cache mode to “none” gives the best performance, assuming you’re using the virtio interface instead of SATA/SCSI. This is a common mistake most newbies make when installing Windows, because virt-manager defaults to the latter, which gives poor performance. The same goes for the network btw: you’d want to use the virtio network interface instead of the emulated NIC. So before you install a Windows guest, make sure you change both those interfaces.

After changing the hardware interfaces, you’ll need to supply the [virtio drivers](github.com/virtio-win/…/README.md) to the Windows setup (via the virtio driver ISO) when prompted.

But if you’ve already installed Windows, you’ll need to install all the virtio drivers first and then update the interfaces after you’ve powered off the VM.

And in case you were wondering, this isn’t an issue with Linux guests, since virt-manager defaults to virtio hardware, and drivers aren’t an issue either.
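For reference, the disk and network settings described above end up as a couple of attributes in the VM's libvirt domain XML. Here is a minimal Python sketch that builds those two fragments. The function names, the image path and the `default` network name are assumptions for illustration only; in practice you would change these values in virt-manager's hardware details or with `virsh edit` rather than generating XML yourself.

```python
import xml.etree.ElementTree as ET


def virtio_disk_xml(image_path: str, image_format: str = "qcow2") -> str:
    """Build a libvirt <disk> element on the virtio bus with cache mode "none"."""
    disk = ET.Element("disk", {"type": "file", "device": "disk"})
    # cache="none" is the cache mode discussed in the comment above
    ET.SubElement(disk, "driver", {"name": "qemu", "type": image_format, "cache": "none"})
    ET.SubElement(disk, "source", {"file": image_path})
    # bus="virtio" instead of sata/scsi; virtio disks appear as vdX in Linux guests
    ET.SubElement(disk, "target", {"dev": "vda", "bus": "virtio"})
    return ET.tostring(disk, encoding="unicode")


def virtio_nic_xml(network: str = "default") -> str:
    """Build a libvirt <interface> element that uses the virtio NIC model."""
    nic = ET.Element("interface", {"type": "network"})
    ET.SubElement(nic, "source", {"network": network})
    # model type="virtio" instead of an emulated NIC
    ET.SubElement(nic, "model", {"type": "virtio"})
    return ET.tostring(nic, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical image path, used only to show the shape of the output
    print(virtio_disk_xml("/var/lib/libvirt/images/win10.qcow2"))
    print(virtio_nic_xml())
```

A Windows guest will only be able to use these devices once the virtio drivers are installed, as noted above.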

What is the difference between Virtual Machine Manager and Proxmos?

Proxmox is an entire distro just for running virtual machines, with a web UI. Virt-manager is a program you install on a normal machine

Aah… Isn’t that what’s called a bare metal OS?

A bare metal OS is an OS running outside of a hypervisor. Virt-manager is a class 1 hypervisor that allows you to host guest operating systems (run VMs).

Hey sorry for the confusion. What I meant is Proxmos is considered as a bare metal hypervisor and Virt manager is a hypervisor inside an OS, right?

Technically no, both use KVM virtualization, which is included in the Linux kernel, so both are “bare metal hypervisors”, otherwise known as class 1 hypervisors. Distinctions can be confusing 😂

Oh dear… I really thought I understood what bare metal means… But looks like this is beyond my tech comprehension

Bare metal is “kernel running on hardware” I think. KVM is a kernel feature, so the virtualization is done in kernel space (?) and on the hardware.

Well this can be a starting point of a rabbit hole. Time to spend hours reading stuff that I don’t really understand.

TL;DR: use what is in the kernel, without strange out-of-tree kernel modules like the ones VirtualBox needs, and use KVM, e.g. on Fedora

virt-manager qemu qemu-kvm

*Proxmox

Virtual Machine Manager is an application that connects to libvirtd on the back end. Think of it as a web browser or file manager for VMs.

Proxmox VE is an entire OS built for virtualization on dedicated servers. It also has support for clusters and live VM migrations between hosts. It is in essence a server OS designed to run in a data center (or homelab) of some kind. It is sort of equivalent to vSphere, but they charge you per CPU socket for enterprise support and stability.

Well, this thread clearly established that I neither have technical knowledge nor pay attention to spelling…

Jokes aside, this is a good explanation. I have seen admins using vSphere and it kind of makes sense. I’m just starting to scratch the surface of homelab, and have now started out with a Raspberry Pi. My dream is a full-fledged, self-sustaining homelab.

If you ever want to get a Proxmox cluster, go for 3-5 identical machines. I have 3 totally different machines and it creates headaches.

What kind of headaches are you having? I’ve been running two completely different machines in a cluster with a pi as a Qdevice to keep quorum and it’s been incredibly stable for years.

One device decided to be finicky and the biggest storage array is all on one system.

It really sucks you can’t do HA with BTRFS. It is more reliable than ZFS due to licensing

What’s the licensing part you mentioned? Can you elaborate a little?

OpenZFS is not GPL compatible so it can never be baked into the kernel in the same way BTRFS can. I’ve run into issues where I’ve needed to downgrade the kernel but if I do the system won’t boot.

Btrfs also doesn’t need any special software to work as it is completely native and baked in.

They both use KVM in the end, so they are both Type 1 hypervisors.

Loading the KVM kernel module turns your kernel into the bare metal hypervisor.
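If you want to see whether that module is actually loaded on your machine, here is a rough Python sketch. It assumes a Linux host where loaded modules are listed in `/proc/modules` and where KVM exposes `/dev/kvm`; the function names are made up for illustration. Note that if KVM is compiled into the kernel rather than built as a module, it will not show up in `/proc/modules`, so the `/dev/kvm` check is the more telling one.

```python
import os
from typing import List


def kvm_modules_from_text(proc_modules_text: str) -> List[str]:
    """Return the KVM-related module names found in /proc/modules-style text."""
    # The first whitespace-separated field of each line is the module name
    names = [line.split()[0] for line in proc_modules_text.splitlines() if line.strip()]
    return [n for n in names if n == "kvm" or n.startswith("kvm_")]


def loaded_kvm_modules(modules_path: str = "/proc/modules") -> List[str]:
    """Read the module list from disk; return an empty list if it cannot be read."""
    try:
        with open(modules_path) as f:
            return kvm_modules_from_text(f.read())
    except OSError:
        return []


def kvm_device_present(dev_path: str = "/dev/kvm") -> bool:
    """True if the KVM device node exists."""
    return os.path.exists(dev_path)


if __name__ == "__main__":
    print("KVM modules loaded:", loaded_kvm_modules())
    print("/dev/kvm present:", kvm_device_present())
```

On an Intel host you would typically expect to see `kvm` and `kvm_intel`, on AMD `kvm` and `kvm_amd`.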

It’s really just Debian with more packages preinstalled, but yeah, the idea is that you have an OS that has the primary purpose of virtualizing other machines.

And a custom kernel with ZFS support

Oh right, they ship a modified kernel, didn’t think of this. I also didn’t know about the zfs thing, my homelab uses btrfs.

I’m also using btrfs, but I originally wanted ZFS before seeing that it was only available through FUSE on my distro.

That’s why I even noticed ZFS was one of the features of Proxmox :)

Apples and oranges really. The underlying tech is the same, but Proxmox is an entire platform.

What? Is there some new controversy going on?

Oh, people recommend VirtualBox all the time and it’s awful.

Ah well, I’ve used VirtualBox, VMware and KVM and I found them all useful for my purposes. VMware is very slick and has an edge on easy graphics acceleration for Windows guests, but since they’re now owned by Broadcom that might become a problem.

I’m happy with VirtualBox on my desktop and KVM on a few servers. I don’t really care to take sides.

What’s awful about it? Genuinely asking

Performance compared to KVM is really poor. And it handles graphics poorly.

I’ve tried QEMU for this, I’ll try KVM one of these days