Study (N=16) finds AI (Cursor/Claude) slows development

from jay@beehaw.org to programming@programming.dev on 11 Jul 2025 14:29

https://beehaw.org/post/21044806

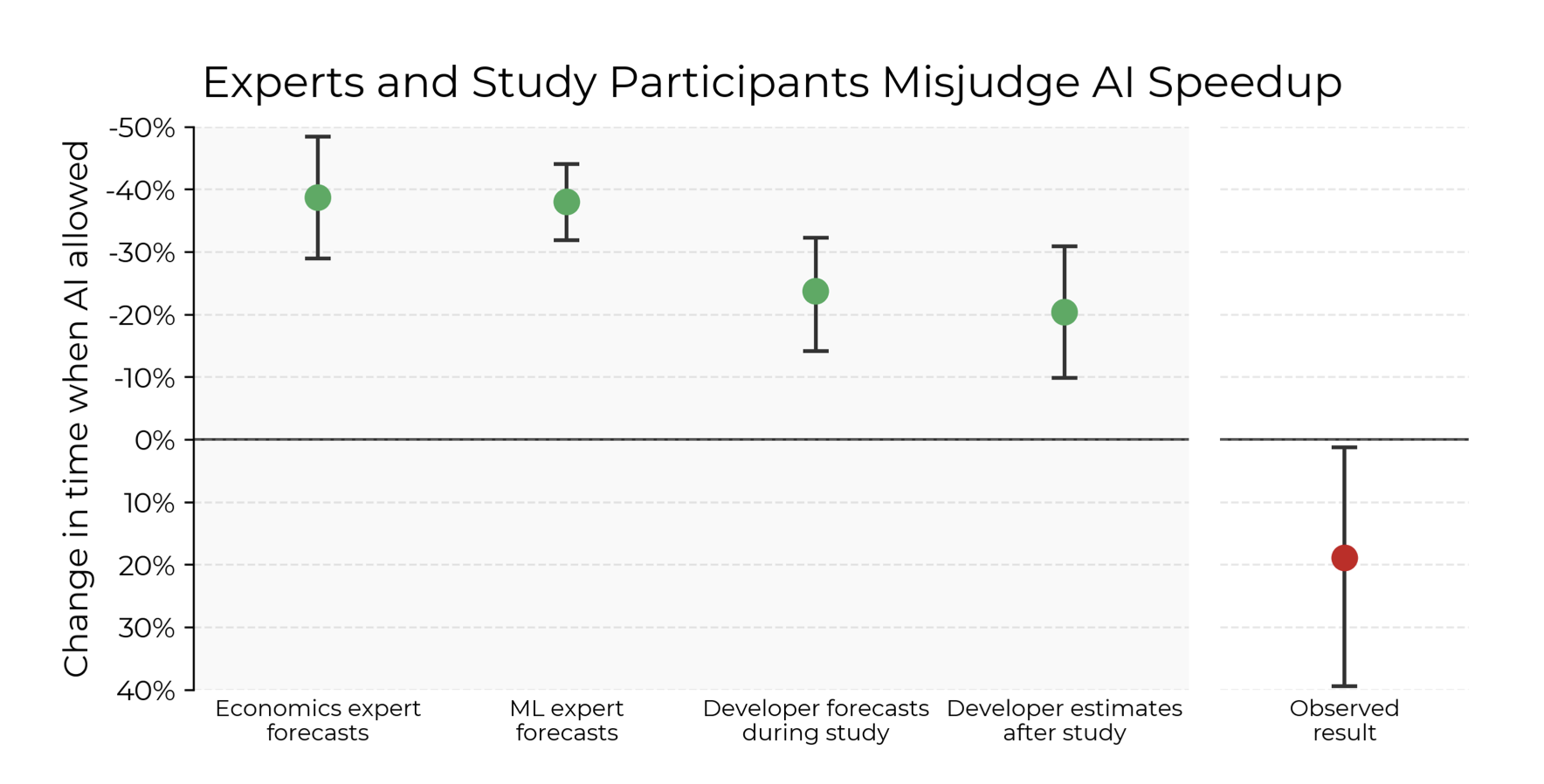

Before starting tasks, developers forecast that allowing AI will reduce completion time by 24%. After completing the study, developers estimate that allowing AI reduced completion time by 20%. Surprisingly, we find that allowing AI actually increases completion time by 19%

N = 16

N=16 developers

Haha yes, I should have made that clear, thanks

You’re acting like this is a gotcha when it’s actually probably the most rigorous study of AI tool productivity change to date.

It definitely is… But it’s possible to be the most rigorous study and also not really prove anything. Proving this sort of stuff is ridiculously hard and expensive. We don’t have proofs for even the most obvious things in programming, like that comments and good variable naming help comprehension. Sometimes studies even find the opposite.

The more important detail is that it's 16 experienced developers. If there's going to be an advantage with AI development tools, it's going to most likely be seen with junior devs with a much wider gap between current and peak performance. This was my first thought reading the article, and it's called out in the study.

That’s my issue with AI. I go to AI after my skills, the documentation and google failed me. Then I go to ChatGPT to get lied to, because ChatGPT doesn’t know either.

And almost without fail, AI doesn’t help me there.

The only thing where AI helps is AI autocomplete in the IDE: if I am doing something very simple and monotonous, it sometimes reduces my typing time a little bit compared to regular autocomplete.

But typing time is like 0.5% of the time I spend developing stuff.

I don’t think that’s true. In fact most people say the opposite - AI doesn’t help junior devs because they can’t recognise when it’s bullshitting. I don’t really believe that either - that’s just ego talking. I expect it helps people of all experience levels fairly equally, but only with tasks that are relatively simple. It’s not like senior engineers never do those though.

Have anything behind that? The paper we're discussing has 4 citations in agreement, so I'm not so sure that most people say the opposite.

What do you mean? I’ve seen people say that all the time on HN. No I’m not going to go and search for comments.

I meant actual data. You're refuting a claim backed by several cited studies in the OP.

Oh you mean when I said this?

No, I don’t have actual data, just direct personal experience of asking AI to do simple and complex tasks. It does much better on simple tasks, especially in very widely discussed domains (HTML, CSS, JavaScript, Python, etc.). Ask it any SystemVerilog stuff and it gets it wrong almost every time, annoyingly!

(minutes) earlier post linking the study

Oh, thanks! I thought Lemmy would warn me if I tried to submit a duplicate URL.

Oh nevermind, that one looks like it was posted after mine. (It has a higher ID, too.)

It’s not a duplicate URL. You posted an image, they posted a link to the study.

I mainly wanted to give the additional context and discussion, more so than say “has already posted”.

I assume I must have compared a modified date or sth, dunno. Misled by it being shown further down in my feed.

Study (N=16) finds absolutely nothing because their sample size is too small to meaningfully substantiate any conclusion.