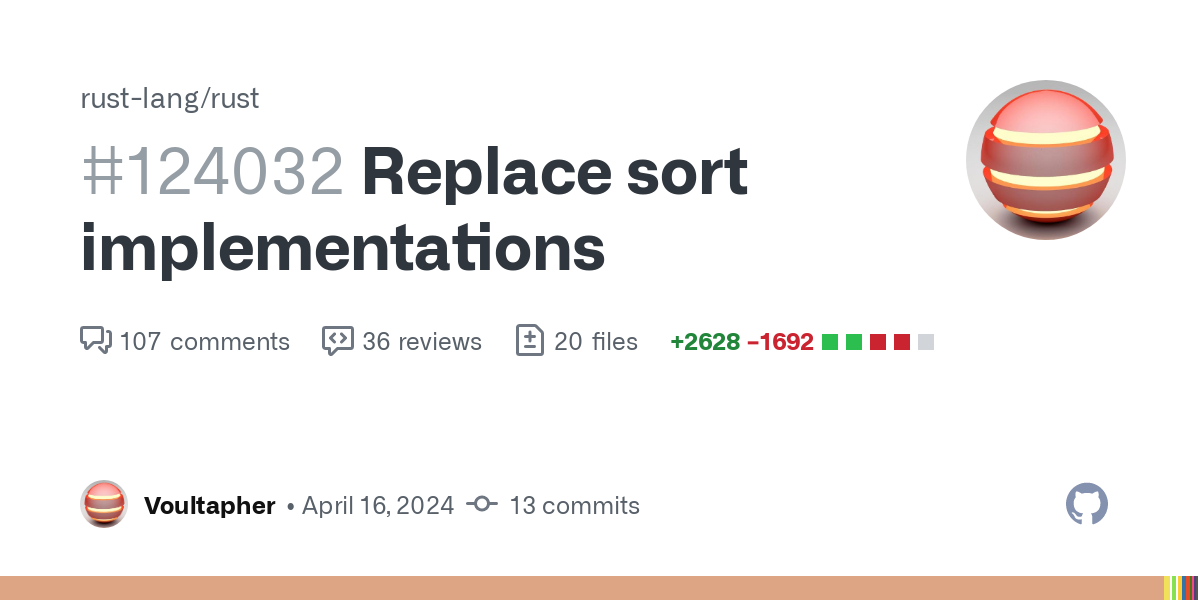

Rust just merged two new very fast sort implementations into stdlib

(github.com)

from farcaster@lemmy.world to rust@programming.dev on 22 Jun 2024 00:17

https://lemmy.world/post/16790671

| name | diff % | speedup |
| --- | --- | --- |
| slice::sort_large_random | -65.49% | x 2.90 |
| slice::sort_large_strings | -37.75% | x 1.61 |
| slice::sort_medium_random | -47.89% | x 1.92 |
| slice::sort_small_random | 11.11% | x 0.90 |
| slice::sort_unstable_large_random | -47.57% | x 1.91 |
| slice::sort_unstable_large_strings | -25.19% | x 1.34 |
| slice::sort_unstable_medium_random | -22.15% | x 1.28 |
| slice::sort_unstable_small_random | -15.79% | x 1.19 |
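For readers unfamiliar with the two families of benchmarks: judging by their names, the `slice::sort_*` rows appear to exercise the stable sort and the `slice::sort_unstable_*` rows the unstable one. A minimal sketch of how the two differ from the caller's side follows; the helper `sort_both` and the sample data are made up for illustration and are not taken from the PR.

```rust
// Hypothetical helper, for illustration only (not from the PR).
// Returns the pairs after a stable sort by key, plus the keys after an unstable sort.
fn sort_both(mut pairs: Vec<(u32, char)>) -> (Vec<(u32, char)>, Vec<u32>) {
    // Stable sort: elements with equal keys keep their original relative order.
    pairs.sort_by_key(|&(key, _)| key);

    // Unstable sort: no guarantee about the relative order of equal elements.
    // For plain integers that makes no observable difference.
    let mut keys: Vec<u32> = pairs.iter().map(|&(key, _)| key).collect();
    keys.sort_unstable();

    (pairs, keys)
}

fn main() {
    let input = vec![(2, 'a'), (1, 'b'), (2, 'c'), (1, 'd')];
    let (stable, keys) = sort_both(input);
    println!("{:?}", stable);
    println!("{:?}", keys);
}
```

Callers do not need to change anything to pick up the new implementations, since the public `sort` and `sort_unstable` methods stay the same.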

I remember some “glidesort” also being introduced. Wonder what happened to that

From what I understand as I skimmed over the stable sort analysis (github.com/Voultapher/sort-research-rs/…/text.md), it lost out against driftsort.

orlp invented PDQSort and Glidesort. He collaborated with Voultapher on Driftsort.

Driftsort is like a successor to Glidesort.

Glidesort had some issues that prevented it from being merged into std, and which are addressed in Driftsort. IIRC it had something to do with codegen bloat.

Does the Rust compiler use their std sort algorithms, or does it already use specialized ones? If the former, it would be a great side-effect if the compiler itself receives additional speed ups because of this.

Alas, on the whole the compiler slowed down as a result of this. I think it’s a worthy tradeoff though.

The post mentioned that the introduction of these new algorithms brings compile-time improvements too, so how should I see this? I assumed it meant that compiling applications that use sorting would speed up, but that seems like a meaningless improvement if overall compilation times have regressed. Or do you mean compiling the compiler has become slower?

I assume you mean the first post of the PR? I’d assume it’s simply outdated (or might not have been true to begin with). See github.com/rust-lang/rust/pull/124032#issuecommen… for the perf run with this PR, it’s showing quite a bit of regression.

Yeah, it was the first line of the linked PR:

It was also repeated a few paragraphs later that the motivation for the changes was both runtime and compile time improvements. So I’m a little bit bummed to hear the compile time impact apparently wasn’t as good as the authors hoped. I’m not even sure I fully endorse the tradeoff, because it seems the gains, while major, only affect very select use cases, while the regressions seem to affect everyone and hurt in an area that is already perceived as a pain point. But oh well, the total regression is still minor so I guess we’ll live with it.

I did not read the whole conversation, but sorting seems a very common use case (not mine, but it seems to me a lot of people sort data), so this seems quite a broad improvement to me.

Note though, as is mentioned in the issue, that the survey showed people still prioritize runtime performance over compilation performance in general, so this tradeoff seems warranted.

It’s not unheard of that regressions can be unmade later on, so here’s hoping :)

Yeah, sorting is definitely a common use case, but note it also didn’t improve every sorting use case. Anyway, even if I’m a bit skeptical I trust the Rust team that they don’t take these decisions lightly.

But the thing that led to my original question was: if the compiler itself uses the std sorting internally, there’s also additional reason to hope that it might have transitive performance benefits. So even if compiling the Rust compiler with this PR was actually slower, compiling again with the resulting compiler could be faster since the resulting compiler benefits from faster sorting. So yeah, fingers crossed 🤞

I would have assumed the benchmark suite accounts for that, otherwise the results aren’t quite as meaningful really. Which ties back to your 2nd sentence: I certainly trust the Rust team more than myself on these things :)