I was right, it is

from Lukstru@fedia.io to science_memes@mander.xyz on 28 Sep 10:04

https://fedia.io/m/science_memes@mander.xyz/t/2778665

Watched Primer’s new video (www.youtube.com/watch?v=GkiITbgu0V0 watch it!) and stumbled over this gem

Took me 17 minutes to get the joke. Good video though.

can I get my instant gratification please?

Sure, here’s a TLDW.

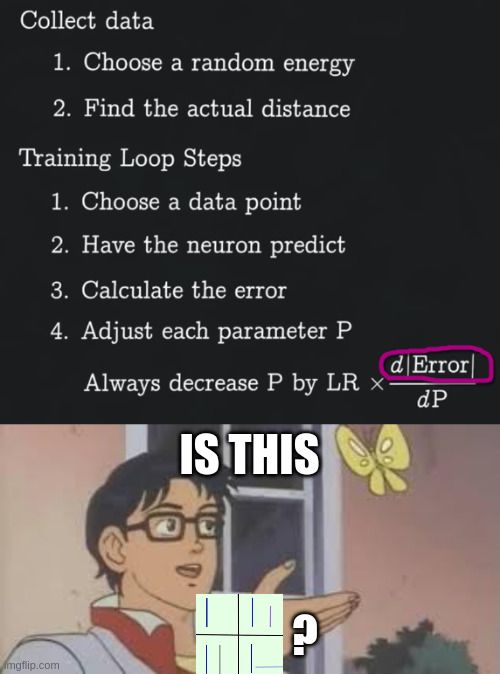

Imagine you’re training an AI model. You feed it data and test whether it comes up with a good answer. Of course it doesn’t do that right away; that’s why you’re training it. You have to correct it.

If you correct the model by correcting the errors, you get overcompensation problems. If you correct it on the differences between the errors, you get a much better correction.

The term for that is LOSS. You correct on LOSS instead of on pure ERROR.
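For anyone who wants the non-meme version, here is a minimal sketch. It is not taken from the video and makes a few assumptions: a one-parameter linear model `y = w * x`, mean squared error as the loss, and plain gradient descent as the correction rule. The function names (`mse_loss`, `mse_grad`, `fit`), the toy data, and the learning rate are all made up for illustration. Each step nudges the weight against the gradient of the loss averaged over all data points, rather than by any single raw error.

```python
# Hypothetical toy example: fit y = w * x by gradient descent on an MSE loss.

def mse_loss(w, xs, ys):
    """Mean squared error of the model y_hat = w * x over the dataset."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def mse_grad(w, xs, ys):
    """Derivative of mse_loss with respect to the weight w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def fit(xs, ys, lr=0.05, steps=200):
    """Start from an untrained weight and repeatedly step downhill on the loss."""
    w = 0.0
    for _ in range(steps):
        # Move w against the loss gradient, scaled by the learning rate.
        w -= lr * mse_grad(w, xs, ys)
    return w


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # toy data generated by the "true" weight w = 2
w = fit(xs, ys)
print(round(w, 3), round(mse_loss(w, xs, ys), 6))
```

The learning rate `lr` sets how big each correction is. In this particular toy problem each step multiplies the distance to the true weight by `1 - 15 * lr`, so any `lr` above 2/15 makes the updates overshoot and diverge instead of settling.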

haha, loss

I watched that video mere hours ago!

Gradient descent? It’s loss.

![](https://lemmy.world/pictrs/image/db669661-f77f-489e-9297-56c1105a1c4b.png)