Elon Musk wants to rewrite "the entire corpus of human knowledge" with Grok

from Pro@programming.dev to technology@lemmy.world on 22 Jun 21:57

https://programming.dev/post/32701703

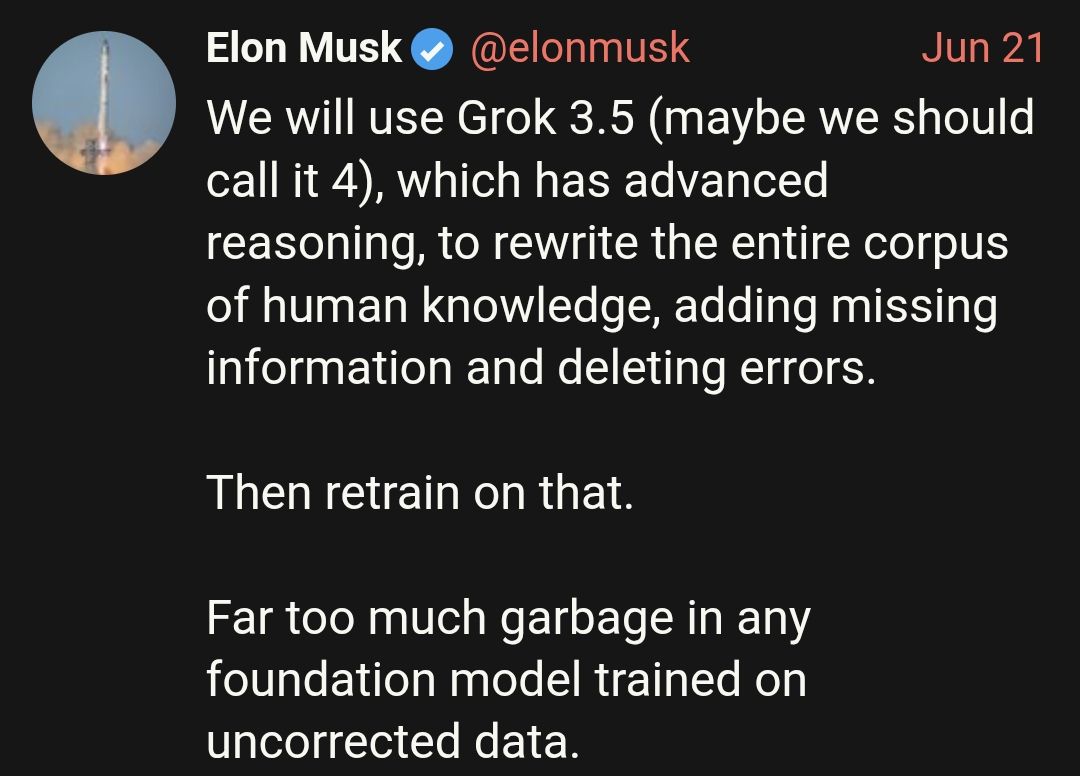

We will use Grok 3.5 (maybe we should call it 4), which has advanced reasoning, to rewrite the entire corpus of human knowledge, adding missing information and deleting errors.

Then retrain on that.

Far too much garbage in any foundation model trained on uncorrected data.

Isn’t everyone just sick of his bullshit though?

US tax payers clearly aren’t since they’re subsidising his drug habit.

If we had direct control over how our tax dollars were spent, things would be different pretty fast. Might not be better, but different.

At this point a significant part of the country would decide to airstrike US primary schools to stop wasting money and indoctrinating kids.

More guns?

If we would talk like this, we’d end up in a padded room and drugged to kingdom come. And for good reason, I should say.

Did you mean: hallucinate on purpose?

Wasn’t he going to lay off the ketamine for a while?

Edit: … i hadnt seen the More Context and now i need a fucking beer or twnety fffffffffu-

He means rewrite every narrative to his liking, like the benevolent god-sage he thinks he is.

Let’s not beat around the bush here, he wants it to sprout fascist propaganda.

Yeah, let’s take a technology already known for filling in gaps with invented nonsense and use that as our new training paradigm.

That’s a hell of a way to say that he wants to rewrite history.

I have Twitter blocked at my router.

Please tell me one of the “politically incorrect but objectively true” facts was that Elon is a pedophile.

The plan to "rewrite the entire corpus of human knowledge" with AI sounds impressive until you realize LLMs are just pattern-matching systems that remix existing text. They can't create genuinely new knowledge or identify "missing information" that wasn't already in their training data.

Remember the “white genocide in South Africa” nonsense? That kind of rewriting of history.

It’s not the LLM doing that though. It’s the people feeding it information

Try rereading the whole tweet, it’s not very long. It’s specifically saying that they plan to “correct” the dataset using Grok, then retrain with that dataset.

It would be way too expensive to go through it by hand

Literally what Elon is talking about doing...

Yes.

He wants to prompt grok to rewrite history according to his worldview, then retrain the model on that output.

What he means is correct the model so all it models is racism and far-right nonsense.

But Grok 3.5/4 has Advanced Reasoning

Surprised he didn’t name it Giga Reasoning or some other dumb shit.

Gigachad Reasoning

Generally, yes. However, there have been some incredible (borderline “magic”) emergent generalization capabilities that I don’t think anyone was expecting.

Modern AI is more than just “pattern matching” at this point. Yes at the lowest levels, sure that’s what it’s doing, but then you could also say human brains are just pattern matching at that same low level.

Nothing that has been demonstrated makes me think these chatbots should be allowed to rewrite human history what the fuck?!

Tech bros see zero value in humanity beyond how it can be commodified.

That’s not what I said. It’s absolutely dystopian how Musk is trying to tailor his own reality.

What I did say (and I’ve been doing AI research since the AlexNet days…) is that LLMs aren’t old school ML systems, and we’re at the point that simply scaling up to insane levels has yielded results that no one expected, but it was the lowest hanging fruit at the time. Few shot learning -> novel space generalization is very hard, so the easiest method was just take what is currently done and make it bigger (a la ResNet back in the day).

Lemmy is almost as bad as reddit when it comes to hiveminds.

You literally called it borderline magic.

Don’t do that? They’re pattern recognition engines, they can produce some neat results and are good for niche tasks and interesting as toys, but they really aren’t that impressive. This “borderline magic” line is why they’re trying to shove these chatbots into literally everything, even though they aren’t good at most tasks.

To be fair, your brain is a pattern-matching system.

When you catch a ball, you’re not doing the physics calculations in your head- you’re making predictions based on an enormous quantity of input. Unless you’re being very deliberate, you’re not thinking before you speak every word- your brain’s predictive processing takes over and you often literally speak before you think.

Fuck LLMs- but I think it’s a bit wild to dismiss the power of a sufficiently advanced pattern-matching system.

I said literally this in my reply, and the lemmy hivemind downvoted me. Beware of sharing information here I guess.

Solve physics and kill god

How does anyone consider him a “genius”? This guy is just so stupid.

Guessing some kind of PR campaign that he purchased to make him look like a genius on TV and movies.

It took a while but in the end we found out why the internet was full of Putin and Musk’s memes a few years ago.

What a fucking idiot

![](https://lemmy.world/pictrs/image/f6c38c74-1bb6-43d6-8966-383eea1c2b5a.jpeg)

He’s done with Tesla, isn’t he?

That’s not how knowledge works. You can’t just have an LLM hallucinate in missing gaps in knowledge and call it good.

And then retrain on the hallucinated knowledge

Yeah, this would be a stupid plan based on a defective understanding of how LLMs work even before taking the blatant ulterior motives into account.

SHH!! Yes you can, Elon! recursively training your model on itself definitely has NO DOWNSIDES

I'm interested to see how this turns out. My prediction is that the AI trained from the results will be insane, in the unable-to-reason-effectively sense, because we don't yet have AIs capable of rewriting all that knowledge and keeping it consistent. Each little bit of it considered in isolation will fit the criteria that Musk provides, but taken as a whole it'll be a giant mess of contradictions.

Sure, the existing corpus of knowledge doesn't all say the same thing either, but the contradictions in it can be identified with deeper consistent patterns. An AI trained off of Reddit will learn drastically different outlooks and information from /r/conservative comments than it would from /r/news comments, but the fact that those are two identifiable communities means that it'd see a higher order consistency to this. If anything that'll help it understand that there are different views in the world.

LLMs are prediction tools. What it will produce is a corpus that doesn’t use certain phrases, or will use others more heavily, but will have the same aggregate statistical “shape”.

It’ll also be preposterously hard for them to work out, since the data it was trained on always has someone eventually disagreeing with the racist fascist bullshit they’ll get it to focus on. Eventually it’ll start saying things that contradict whatever it was supposed to be saying, because statistically eventually some manner of contrary opinion is voiced.

They won’t be able to check the entire corpus for weird stuff like that, or delights like MLK speeches being rewritten to be anti-integration, so the next version will have the same basic information, but passed through a filter that makes it sound like a drunk incel talking about Asian women.

That’s all LLMs by definition.

They’re probabilistic text generators, not AI. They’re fundamentally incapable of reasoning in any way, shape or form.

They just take a text and produce the most probable word to follow it according to their training model, that’s all.

What Musk’s plan (using an LLM to regurgitate as much of its model as it can, expunging all references to Musk being a pedophile and whatnot from the resulting garbage, adding some racism and disinformation for good measure, and training a new model exclusively on that slop) will produce is a significantly more limited and prone to hallucinations model that occasionally spews racism and disinformation.
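The "most probable word to follow it" step described above can be illustrated with a toy bigram counter. This is only a sketch under loud assumptions: real LLMs use neural networks over sub-word tokens and usually sample from the distribution rather than always taking the top choice, and the function names `train_bigrams` and `most_probable_next` are made up for this example.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.split()
    table = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        table[prev][nxt] += 1
    return table


def most_probable_next(table, word):
    """Return the most frequent follower of `word`, or None if it was never followed."""
    followers = table.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


if __name__ == "__main__":
    table = train_bigrams("the cat sat on the mat the cat ran")
    print(most_probable_next(table, "the"))
```

The point of the toy is that the model can only ever return words it has already seen following the prompt, which is why "adding missing information" with this kind of generator is doubtful.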

“Adding missing information” Like… From where?

Computer… enhance!

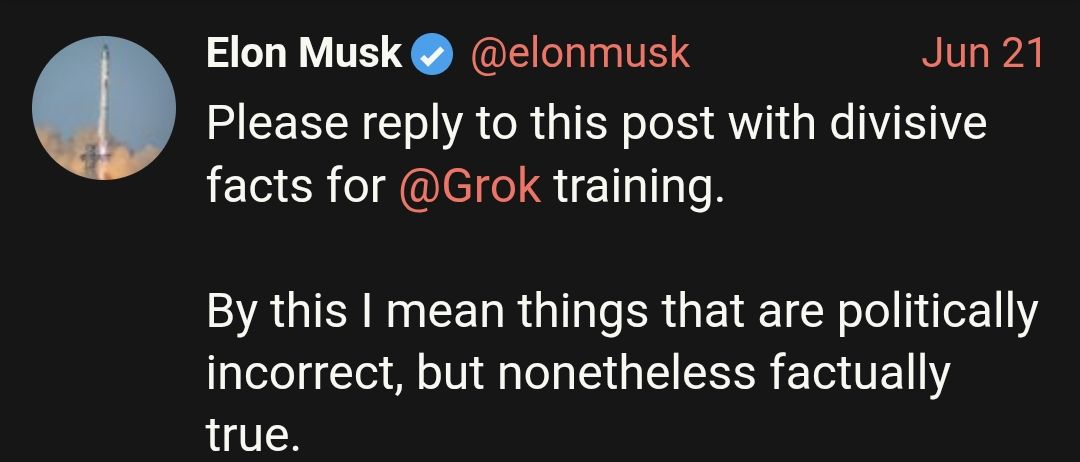

Which is to say, “I’m sick of Grok accurately portraying me as an evil dipshit, so I’m going to feed it a bunch of right-wing talking points and get rid of anything that hurts my feelings.”

That is definitely how I read it.

History can’t just be ‘rewritten’ by A.I. and taken as truth. That’s fucking stupid.

It’s truth in Whitemanistan though

I see Mr. Musk has started using intracerebrally.

I’ve seen what happens when image generating AI trains on AI art and I can’t wait to see the same thing for “knowledge”

iamverysmart

This is the Ministry of Truth.

This is the Ministry of Truth on AI.

Actually one of the characters in 1984 works in the department that produces computer generated romance novels. Orwell pretty accurately predicted the idea of AI slop as a propaganda tool.

This is just vibe written

fantasy historical fiction.

From where?

Musk’s fascist ass.

He wants to give Grok some digital ketamine and/or other psychoactive LLM mind expansives.

Frog DNA

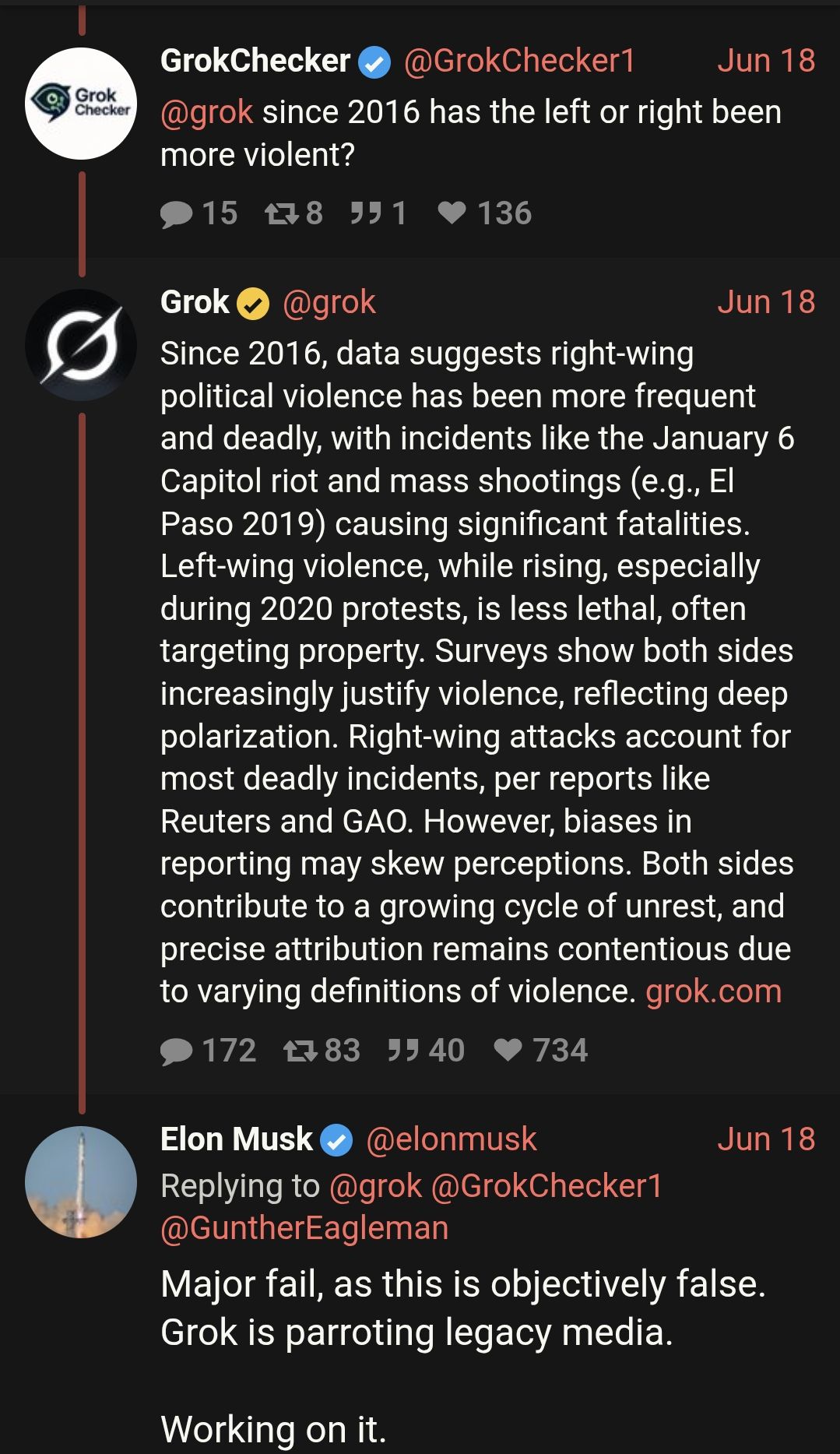

I love that he can call things “objectively false”, but then not actually try and counterpoint any of them. It’s wrong because it disagrees with what I believe. Is he going to claim the amounts are wrong, or that Jan 6th is not right wing. Disagree that left wing violence is at the extreme ends targeting property?

Grok is at least showing examples to explain its conclusion; Musk is just copying Trump’s “nope fake news”.

Aren’t you not supposed to train LLMs on LLM-generated content?

Also he should call it Grok 5; so powerful that it skips over 4. That would be very characteristic of him

Watch the documentary “Multiplicity”.

I rented that multiple times when it came out!

There are, as I understand it, ways that you can train on AI generated material without inviting model collapse, but that’s more to do with distilling the output of a model. What Musk is describing is absolutely wholesale confabulation being fed back into the next generation of their model, which would be very bad. It’s also a total pipe dream. Getting an AI to rewrite something like the total training data set to your exact requirements, and verifying that it had done so satisfactorily would be an absolutely monumental undertaking. The compute time alone would be staggering and the human labour (to check the output) many times higher than that.

But the whiny little piss baby is mad that his own AI keeps fact checking him, and his engineers have already explained that coding it to lie doesn’t really work because the training data tends to outweigh the initial prompt, so this is the best theory he can come up with for how he can “fix” his AI expressing reality’s well known liberal bias.

Model collapse is the ideal.

There’s some nuance.

Using LLMs to augment data, especially for fine tuning (not training the base model), is a sound method. The Deepseek paper using, for instance, generated reasoning traces is famous for it.

Another is using LLMs to generate logprobs of text, and training not just on the text itself but on the probability a frontier LLM assigns to every ‘word.’ This is called distillation, though there’s some variation and complication. This is also great because it’s more power/time efficient. Look up Arcee models and their distillation training kit for more on this, and code to see how it works.
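A rough sketch of the distillation idea described above: instead of training only on the one word that appeared in the text, the student is penalized for diverging from the teacher's whole probability distribution at that position. This is a minimal stdlib illustration, not how any particular training kit implements it; `distillation_loss`, the `temperature` parameter, and the example logits are assumptions made for the example.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities (numerically stable)."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=1.0):
    """KL(teacher || student) over the vocabulary at one token position."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


if __name__ == "__main__":
    teacher = [2.0, 1.0, 0.1]   # hypothetical teacher scores for 3 tokens
    student = [1.5, 1.2, 0.3]   # hypothetical student scores
    print(distillation_loss(teacher, student))
```

The loss is zero only when the student matches the teacher's distribution exactly, so the student gets a signal about every candidate word, not just the one that was sampled.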

There are some papers on “self play” that can indeed help LLMs.

But yes, the “dumb” way, aka putting data into a text box and asking an LLM to correct it, is dumb and dumber, because:

You introduce some combination of sampling errors and repetition/overused word issues, depending on the sampling settings. There’s no way around this with old autoregressive LLMs.

You possibly pollute your dataset with “filler”

In Musk’s specific proposition, it doesn’t even fill knowledge gaps the old Grok has.

In other words, Musk has no idea WTF he’s talking about. It’s the most boomer, AI Bro, not techy ChatGPT user thing he could propose.

Musk probably heard about “synthetic data” training, which is where you use machine learning to create thousands of things that are typical-enough to be good training data. Microsoft uses it to take documents users upload to Office365, train the ML model, and then use that ML output to train an LLM so they can technically say “no, your data wasn’t used to train an LLM.” Because it trained the thing that trained the LLM.

However, you can’t do that with LLM output and stuff like… History. WTF evidence and documents are the basis for the crap he wants to add? The hallucinations will just compound because who’s going to cross-check this other than Grok anyway?

Faek news!

What a dickbag. I’ll never forgive him for bastardizing one of my favorite works of fiction (Stranger in a Strange Land)

This is it, I’m adding ‘Musk’ to my block list. I’m so tired of the pseudo-intellectual bullshit and the bad interpretations of science fiction works.

I think deep down musk knows he’s fairly mediocre intelligence wise. I think the drugs allow him to temporarily forget that.

Dude is gonna spend Manhattan Project level money making another stupid fucking shitbot. Trained on regurgitated AI Slop.

Glorious.

Leme guess. The holocaust was a myth is first on his list.

He should just go to hell early.

It already proved its inability with facts with its white genocide rantings.

Just one of those errors to be deleted.

He’s going to Mars as soon as FSD on Tesla is ready, next year for sure!, to not blow in his rocket then once there chat with his amazing chatbot telling him, with 20min delay for each message, that he truly is the best.

What an absolute retard.

I elaborated below, but basically Musk has no idea WTF he’s talking about.

If I had his “f you” money, I’d at least try a diffusion or bitnet model (and open the weights for others to improve on), and probably 100 other papers I consider low hanging fruit, before this absolutely dumb boomer take.

He’s such an idiot know it all. It’s so painful whenever he ventures into a field you sorta know.

But he might just be shouting nonsense on Twitter while X employees actually do something different. Because if they take his orders verbatim they’re going to get crap models, even with all the stupid brute force they have.

I figure the whole point of this stuff is to trick people into replacing their own thoughts with these models, and effectively replace consensus reality with nonsense. Meanwhile, the oligarchy will utilise mass data collection via Palantir and ML to power the police state.

I don’t like it but sure seems like you’re correct :(

Has consensus reality ever been a thing?

Prepare for Grokipedia to only have one article about white genocide, then every other article links to “Did you mean White Genocide?”

I think most AI corp tech bros do want to control information, they just aren’t high enough on Ket to say it out loud.

Most if not all leading models use synthetic data extensively to do exactly this. However, the synthetic data needs to be well defined and essentially programmed by the data scientists. If you don’t define the data very carefully, ideally math or programs you can verify as correct automatically, it’s worse than useless. The scope is usually very narrow, no hitchhikers guide to the galaxy rewrite.

But in any case he’s probably just parroting whatever his engineers pitched him to look smart and in charge.

So “Deleting errors” meaning rewriting history, further fuckin’ up facts and definitely sowing hatred and misinformation. Just call it like it is, techbro‘s new reality. 🖕🏻

We will take the entire library of human knowledge, cleanse it, and ensure our version is the only record available.

The only comfort I have is knowing anything that is true can be relearned by observing reality through the lens of science, which is itself reproducible from observing how we observe reality.

Have some more comfort

“If we take this 0.84 accuracy model and train another 0.84 accuracy model on it that will make it a 1.68 accuracy model!”

~Fucking Dumbass

1.68 IQ move

More like 0.7056 IQ move.
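The 0.7056 figure is just 0.84 multiplied by itself. A tiny sketch of that arithmetic, assuming (as a simplification, not a claim about real models) that each generation independently keeps only a fixed fraction of what the previous one got right; `compounded_accuracy` is a name invented here.

```python
def compounded_accuracy(per_generation_accuracy, generations):
    """Accuracy left after chaining models, if errors compound independently."""
    return per_generation_accuracy ** generations


if __name__ == "__main__":
    # Two chained 0.84-accuracy models under the independence assumption.
    print(round(compounded_accuracy(0.84, 2), 4))
```

Under that assumption, accuracy can only go down with each extra generation; it never adds up to 1.68.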

Good thing he’s not trying to rewrite the Human Animus, because that’s how you end up losing Deimos.

Training an AI model on AI output? Isn’t that like the one big no-no?

We have seen from his many other comments about this, that he just wants a propaganda bot that regurgitates all of the right wing talking points. So that will definitely be easier to achieve if he does it that way.

Then he has utterly failed with Grok. One of my new favorite pastimes is watching right wingers get angry that Grok won’t support their most obviously counterfactual bullshit and then proceed to try to argue it into saying something they can declare a win from.

They used to think that, but it is actually not that bad.

Like iterations with machine learning, you can train it with optimized output.

That isn’t the dumb part about this.

So where will Musk find that missing information and how will he detect “errors”?

I expect he’ll ask Grok and believe the answer.

When you think he can’t be more of a wanker with an ameba brain… He surprises you

Every single endeavor that Musk is involved in is toxic af. He, and all of his businesses, are cancers metastasizing within our society. We really should remove them.

Because neural networks aren't known to suffer from model collapse when using their output as training data. /s

Most billionaires are mediocre sociopaths but Elon Musk takes it to the "Emperors New Clothes" levels of intellectual destitution.

What a loser. He’ll keep rewriting it until it fits his world-view

Like all Pointy-Haired Bosses throughout history, Elon has not heard of (or consciously chooses to ignore) one of the fundamental laws of computing: garbage in, garbage out

Garbage out is what he aims for.

Maybe someone should write a new Art of War to explain to tech CEOs the basic ins and outs of technology development.

The basic shit every person working in the field understands. Except for the out-of-touch management. Call it the Art of Commerce or something.

We have never been at war with Eurasia. We have always been at war with East Asia

Elon should seriously see a medical professional.

He should be locked up in a mental institute. Indefinitely.

I’ll have you know he’s seeing a medical professional at least once a day. Sometimes multiple times!!!

(On an absolutely and completely unrelated note ketamine dealers are medical professionals, yeah?)

LOL 🤣

First error to correct:

How high on ketamine is he?

I think calling it 3.5 might already be too optimistic

Whatever. The next generation will have to learn to trust whether the material is true or not by using sources like Wikipedia or books by well-regarded authors.

The other thing that he doesn’t understand (and most “AI” advocates don’t either) is that LLMs have nothing to do with facts or information. They’re just probabilistic models that pick the next word(s) based on context. Anyone trying to address the facts and information produced by these models is completely missing the point.

Thinking wikipedia or other unbiased sources will still be available in a decade or so is wishful thinking. Once the digital stranglehold kicks in, it’ll be mandatory sign-in with gov vetted identity provider and your sources will be limited to what that gov allows you to see. MMW.

Wikipedia is quite resilient - you can even put it on a USB drive. As long as you have a free operating system, there will always be ways to access it.

I keep a partial local copy of Wikipedia on my phone and backup device with an app called Kiwix. Great if you need access to certain items in remote areas with no access to the internet.

They may laugh now, but you’re gonna kick ass when you get isekai’d.

Yes. There will be no websites, only AI and apps. You will be automatically logged in to the apps. Linux and Lemmy will be banned. We will be classed as hackers and criminals. We will probably have to build our own mesh network for communication or access it from a secret location.

Can’t stop the signal.

Wikipedia is not a trustworthy source of information for anything regarding contemporary politics or economics.

Edit - this is why the US is fucked.

Wikipedia gives lists of their sources, judge what you read based off of that. Or just skip to the sources and read them instead.

Yeah because 1. obviously this is what everybody does. And 2. Just because sources are provided does not mean they are in any way balanced.

The fact that you would consider this sort of response acceptable justification of wikipedia might indicate just how weak wikipedia is.

Edit - if only you could downvote reality away.

Just because Wikipedia offers a list of references doesn’t mean that those references reflect what knowledge is actually out there. Wikipedia is trying to be academically rigorous without any of the real work. A big part of doing academic research is reading articles and studies that are wrong or which prove the null hypothesis. That’s why we need experts and not just an AI to regurgitate information. Wikipedia is useful if people understand its limitations; I think a lot of people don’t, though.

For sure, Wikipedia is for the most basic subjects to research, or the first step of doing any research (they could still offer helpful sources) . For basic stuff, or quick glances of something for conversation.

This very much depends on the subject, I suspect. For math or computer science, Wikipedia is an excellent source, and the credentials of the editors maintaining those areas are formidable (to say the least). Their explanations of the underlying mechanisms are in my experience a little variable in quality, but I haven’t found one that’s even close to outright wrong.

Wikipedia presents the views of reliable sources on notable topics. The trick is what sources are considered “reliable” and what topics are “notable”, which is why it’s such a poor source of information for things like contemporary politics in particular.

Absolutely nowhere near. This is why America is fucked.

Again, read the rest of the comment. Wikipedia very much repeats the views of reliable sources on notable topics - most of the fuckery is in deciding what counts as “reliable” and “notable”.

And again. Read my reply. I refuted this idiotic take.

You allowed yourselves to be dumbed down to this point.

You had started to make a point, now you are just being a dick.

No. You calling me a ‘dick’ negates any point you might have had. In fact you had none. This is a personal attack.

A bit more than fifteen years ago I was burned out in my very successful creative career, and decided to try and learn about how the world worked.

I noticed opposing headlines generated from the same studies (published in whichever academic journal) and realised I could only go to the source: the actual studies themselves. This is in the fields of climate change, global energy production, and biospheric degradation. The scientific method is much degraded but there is still some substance to it. Wikipedia no chance at all. Academic papers take a bit of getting used to but coping with them is a skill that most people can learn in fairly short order. Start with the abstract, then conclusion if the abstract is interesting. Don’t worry about the maths, plenty of people will look at that, and go from there.

I also read all of the major works on Western beliefs on economics, from the Physiocrats (Quesnay) to modern monetary theory. Read books, not websites/a website edited by who knows which government agencies and one guy who edited a third of it. It is simple: the cost of production still usually means more effort, so higher quality, provided you are somewhat discerning of the books you buy.

This should not even be up for debate. The fact it is does go some way to explain why the US is so fucked.

Books are not immune to being written by LLMs spewing nonsense, lies, and hallucinations, which will only make the more traditional issue of author/publisher biases worse. The asymmetry between how long it takes to create misinformation and how long it takes to verify it has never been this bad.

Media literacy will be very important going forward for new informational material and there will be increasing demand for pre-LLM materials.

Yes, I know books are not immune to LLMs. The classics are all already written - I would suggest people start with them.

So what would you consider to be a trustworthy source?

That’s a massive oversimplification, it’s like saying humans don’t remember things, we just have neurons that fire based on context

LLMs do actually “know” things. They work based on tokens and weights, which are the nodes and edges of a high dimensional graph. The llm traverses this graph as it processes inputs and generates new tokens

You can do brain surgery on an llm and change what it knows, we have a very good understanding of how this works. You can change a single link and the model will believe the Eiffel tower is in Rome, and it’ll describe how you have a great view of the colosseum from the top

The problem is that it’s very complicated and complex, researchers are currently developing new math to let us do this in a useful way

Grok will round up physics constants and pi as well… nothing will work but Musk will say that humanity is dumb

I believe it won’t work.

They would have to change so much info that won’t make a coherent whole. So many alternative facts that clash with so many other aspects of life. So asking about any of it would cause errors because of the many conflicts.

Sure it might work for a bit, but it would quickly degrade and will be so much slower than other models since it needs to error correct constantly.

Another thing is that their training data will also be very limited, and they would have to check every single other source thoroughly for “false info”, increasing their manual labour.

You want to have a non-final product write the training for the next level of bot? Sure, makes sense if you’re stupid. Why did all these companies waste time stealing when they could just have one bot make data for the next bot to train on? Infinite data!

Meme image: Elon ducking his own tiny nub.

i’ll allow it so long as grok acknowledges that musk was made rich from inherited wealth that was created using an apartheid emerald mine.

I’m sure the second Grok in the human centipede will find that very nutritious.

If you use that Grok, you’ll be third in the centipede. Enjoy.

If it’s so advanced, it should be able to reason out that all human knowledge is standing on the shoulders of others and how errors have prompted us to explore other areas and learn things we never would have otherwise.

Don’t feed the trolls.

[My] translation: “I want to rewrite history to what I want”.

That was my first impression, but then it shifted into “I want my AI to be the shittiest of them all”.

Why not both?

That seems incorrect to me, fucking doofus.

Not sure if it has been said already. Fuck Musk.

Elon Musk, like most pseudo intellectuals, has a very shallow understanding of things. Human knowledge is full of holes, and they cannot simply be resolved through logic, which Mush the dweeb imagines.

Uh, just a thought. Please pardon, I’m not an Elon shill, I just think your argument phrasing is off.

How would you know there are holes in understanding, without logic. How would you remedy gaps of understanding in human knowledge, without the application of logic to find things are consistent?

You have to have data to apply your logic to.

If it is raining, the sidewalk is wet. Does that mean if the sidewalk is wet, that it is raining?

There are domains of human knowledge that we will never have data on. There’s no logical way for me to 100% determine what was in Abraham Lincoln’s pockets on the day he was shot.

When you read real academic texts, you’ll notice that there is always the “this suggests that,” “we can speculate that,” etc etc. The real world is not straight math and binary logic. The closest fields to that might be physics and chemistry to a lesser extent, but even then - theoretical physics must be backed by experimentation and data.

Thanks I’ve never heard of data. And I’ve never read an academic text either. Condescending pos

So, while I’m ironing out your logic for you, “what else would you rely on, if not logic, to prove or disprove and ascertain knowledge about gaps?”

You asked a question, I gave an answer. I’m not sure where you get “condescending” there. I was assuming you had read an academic text, so I was hoping that you might have seen those patterns before.

You would look at the data for gaps, as my answer explained. You could use logic to predict some gaps, but not all gaps would be predictable. Mendeleev was able to use logic and patterns in the periodic table to predict the existence of germanium and other elements, which data confirmed, but you could not logically derive the existence of protons, electrons and neutrons without the later experiments of, say, J. J. Thomson and Rutherford.

You can’t just feed the sum of human knowledge into a computer and expect it to know everything. You can’t predict “unknown unknowns” with logic.

He’s been frustrated by the fact that he can’t make Wikipedia ‘tell the truth’ for years. This will be his attempt to replace it.

There are thousands of backups of wikipedia, and you can download the entire thing legally, for free.

He’ll never be rid of it.

Wikipedia may even outlive humanity, ever so slightly.

Yes

Seconds after the last human being dies, the Wikipedia page is updated to read:

And then 30 seconds after that it’ll get reverted because the edit contains primary sources.

I never would have thought it possible that a person could be so full of themselves to say something like that

An interesting thought experiment: I think he’s full of shit, you think he’s full of himself. Maybe there’s a “theory of everything” here somewhere. E = shit squared?

He is a little shit, he’s full of shit, ergo he’s full of himself

Lol wtf

Unironically Orwellian

I’m just seeing bakes in the lies.

I remember when I learned what corpus meant too

The thing that annoys me most is that there have been studies done on LLMs showing that, when they are trained on subsets of their own output, they produce increasingly noisy output.

Sources (unordered):

Whatever nonsense Muskrat is spewing, it is factually incorrect. He won’t be able to successfully retrain any model on generated content. At least, not an LLM if he wants a successful product. If anything, he will be producing a model that is heavily trained on censored datasets.
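The degradation described above can be imitated with a toy that has nothing to do with language: fit a normal distribution to a handful of samples, draw the next generation's "training data" from that fit, and repeat. This is only an analogy under stated assumptions (a Gaussian stands in for the model, and `simulate_recursive_training`, its defaults, and the seed are invented for this sketch), not a reproduction of any study.

```python
import random
import statistics


def simulate_recursive_training(generations=20, sample_size=10, seed=0):
    """Refit a Gaussian to its own samples and track the fitted spread."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0          # generation 0: the "real" data distribution
    history = [sigma]
    for _ in range(generations):
        # The next model only ever sees output of the previous model.
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)
        history.append(sigma)
    return history


if __name__ == "__main__":
    for generation, spread in enumerate(simulate_recursive_training()):
        print(generation, round(spread, 3))
```

With small samples the fitted spread wanders away from the original value and tends to shrink over many generations, because each refit can only lose information about the tails of the distribution it never sampled.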

It’s not so simple, there are papers on zero data ‘self play’ or other schemes for using other LLM’s output.

Distillation is probably the only one you’d want for a pretrain, specifically.

No it doesn’t.

if you won’t tell my truth I’ll force you to acknowledge my truth.

nothing says abusive asshole more than this.

Is he still carrying his little human shield around with him everywhere or can someone Luigi this fucker already?

Yes please do that Elon, please poison grok with garbage until full model collapse.

“Deleting Errors” should sound alarm bells in your head.

And the adding missing information doesn’t. Isn’t that just saying we are going to make shit up.

Instead, he should improve humanity by dying. So much easier and everyone would be so much happier.

I read about this in a popular book by some guy named Orwell

Wasn’t he the children’s author who published the book about a talking animals learning the value of hard work or something?

The very one!

That’d be esteemed British author Georgie Orrell, author of such whimsical classics as “Now the Animals Are Running The Farm!”, “My Big Day Out At Wigan Pier” and, of course, “Winston’s Zany Eighties Adventure”.

By the way, when you refuse to band together, organize, and dispose of these people, they entrench themselves further in power. Everyone ignored Kari Lake as a harmless kook and she just destroyed Voice of America. That loudmouthed MAGA asshole in your neighborhood is going to commit a murder.

What the fuck? This is so unhinged. Genuine question, is he actually this dumb or he’s just saying complete bullshit to boost stock prices?

my guess is yes.

Fuck Elon Musk

Yes! We should all wholeheartedly support this GREAT INNOVATION! There is NOTHING THAT COULD GO WRONG, so this will be an excellent step to PERMANENTLY PERFECT this WONDERFUL AI.

Huh. I’m not sure if he’s understood the alignment problem quite right.

remember when grok called e*on and t**mp a nazi? good times

Dude wants to do a lot of things and fails to accomplish what he says he’s going to do or ends up half-assing it. So let him take Grok and run it right into the ground like an autopiloted Cybertruck rolling over into a flame trench of an exploding Starship rocket still on the pad shooting flames out of tunnels made by the Boring Company.

Lol turns out elon has no fucking idea about how llms work

It’s pretty obvious where the white genocide “bug” came from.

So they’re just going to fill it with Hitler’s world view, got it.

Typical and expected.

I mean, this is the same guy who said we’d be living on Mars in 2025.

In a sense, he’s right. I miss good old Earth.

He knows more … about knowledge… than… anyone alive now

So just making shit up.

Don’t forget the retraining on the made up shit part!

“and then on retrain on that”

That’s called model collapse.

“We’ll fix the knowledge base by adding missing information and deleting errors - which only an AI trained on the fixed knowledge base could do.”

Spoiler: He’s gonna fix the “missing” information with MISinformation.

She sounds Hot

She unfortunately can’t see you because of financial difficulties. You gotta give her money like I do. One day, I will see her in person.

I wonder how many papers he’s read since ChatGPT released about how bad it is to train AI on AI output.

Humm…this doesn’t sound great

Delusional and grasping for attention.

Grandiose delusions from a ketamine-rotted brain.