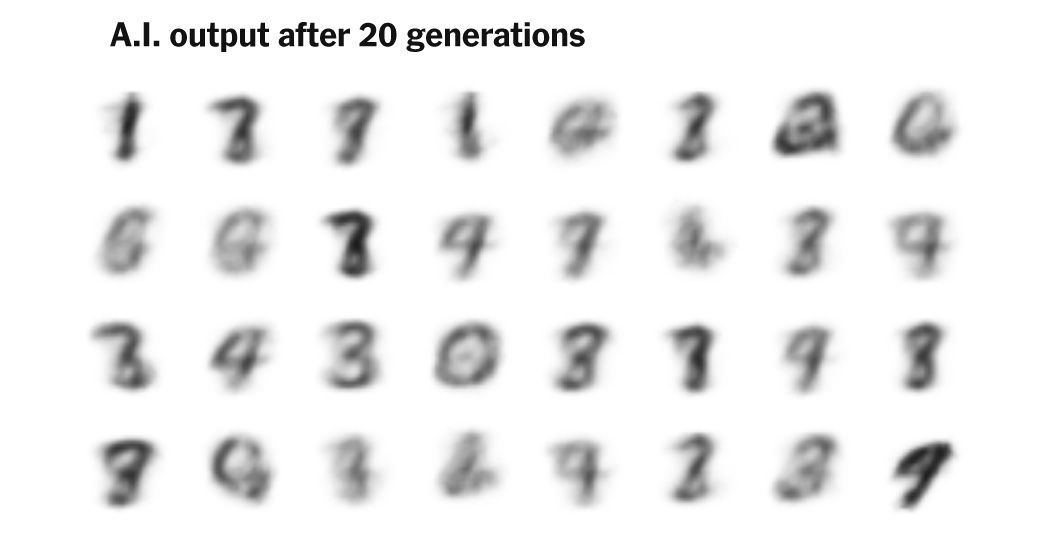

When A.I.’s Output Is a Threat to A.I. Itself | As A.I.-generated data becomes harder to detect, it’s increasingly likely to be ingested by future A.I., leading to worse results.

(www.nytimes.com)

from silence7@slrpnk.net to technology@lemmy.world on 26 Aug 2024 17:41

https://slrpnk.net/post/12742031



My takeaway from this is:

[…] .htm files on my webserver.

[…] 444 and closing the connection, return a random .htm of AI-generated slop (instead of serving the real content).

I might just do this. It would be fun to write a quick python script to automate this so that it keeps going forever. Just have a link that regens junk then have it go to another junk html file forever more.
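A rough sketch of the "junk page that links to another junk page forever" idea, using only the Python standard library. Everything named here (`WORDS`, `junk_page`, `JunkHandler`, `serve`, the `/junk/<id>.htm` URL scheme, port 8080) is made up for illustration, and the word salad is only a stand-in for actual AI-generated slop. It also serves junk to every visitor; deciding which requests come from scrapers is not covered.

```python
import random
import zlib
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical filler vocabulary; a real setup would use pre-generated slop.
WORDS = ["sparkle", "blue", "fish", "plan", "muffin", "photocopy",
         "steel", "forest", "centipede", "dataset", "button", "circle"]


def junk_page(seed: int, n_words: int = 200) -> str:
    """Build one junk HTML page that links to another junk page."""
    rng = random.Random(seed)  # same seed -> same page, so URLs are stable
    text = " ".join(rng.choice(WORDS) for _ in range(n_words))
    next_id = rng.randrange(10**9)  # target of the "next" link
    return (
        "<html><body>"
        f"<p>{text}</p>"
        f'<a href="/junk/{next_id}.htm">more</a>'
        "</body></html>"
    )


class JunkHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Derive the seed from the requested path so no files need storing.
        seed = zlib.crc32(self.path.encode("utf-8"))
        body = junk_page(seed).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8080) -> None:
    """Run the junk server until interrupted (not called automatically)."""
    HTTPServer(("127.0.0.1", port), JunkHandler).serve_forever()
```

Calling `serve()` starts it locally; every page is generated on the fly from its own URL, so a crawler that follows the links never runs out of pages.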

Also send this junk to Reddit comments to poison that data too because fuck Spez?

There’s something that edits your comments after 2 weeks to random words like “sparkle blue fish to be redacted by redactior-program.com” or something.

That’s a little different than what I mean.

I mean running a single bot from a script that interacts a normal human amount, during normal human hours in a configurable time zone, and acts like a real person, just to poison their dataset.

I mean you can just not use the platform…

Yes I’m already doing that.

This is a great idea, I might create a Laravel package to automatically do this.

QUICK

Someone create a GitHub project that does this

It’s the AI analogue of confirmation bias.

Inbreeding

What are you doing step-AI?

Are you serious? Right in front of my local SLM?

Photocopy of a photocopy.

All according to keikaku.

[TL note: keikaku means plan]

No don’t listen to them!

Keikaku means cake! (Muffin to be precise, because we got the muffin button!)

So kinda like the human centipede, but with LLMs? The LLMillipede? The AI Centipede? The Enshittipede?

Except it just goes in a circle.

))<>((

AI centipede. Fucking fantastic.

Imo this is not a bad thing.

All the big LLM players are staunchly against regulation; this is one of the outcomes of that. So, by all means, please continue building an ouroboros of nonsense. It’ll only make the regulations that eventually get applied to ML stricter and more incisive.

They call this scenario the Habsburg Singularity

So now LLM makers actually have to sanitize their datasets? The horror…

I don’t think that’s tractable.

Oh no, it’s very difficult, especially on the scale of LLMs.

That said, the rest of us (those of us who have any amount of respect for ourselves, our craft, and our fellow humans) have been sourcing our data carefully since way before NNs, such as by asking the relevant authority for it (e.g. asking the post office for images of handwritten destinations).

Is this slow and cumbersome? Oh yes. But it delays the need for over-restrictive laws, just like with RC crafts before drones. And by extension, it allows those who could not source the material they needed through conventional means, or those small new startups with no idea what they were doing, to skim the gray border and still get a small and hopefully usable dataset.

And now, someone had the grand idea to not only scour and scavenge the whole internet with reckless abandon, but also boast about it. So now everyone gets punished.

Lastly: don’t get me wrong, laws are good (duh), but less restrictive or incomplete laws can be nice as long as everyone respects each other. I’m excited to see what the future brings in this regard, but I hate the idea that those who brought about this change are likely the only ones to go free.

that first L stands for large. sanitizing something of this size is not hard, it’s functionally impossible.

You don’t have to sanitize the weights, you have to sanitize the data you use to get the weights. Two very different things, and while I agree that sanitizing an LLM after training is close to impossible, sanitizing the data you give it is much, much easier.

They can’t.

They went public too fast chasing quick profits, and now the well is too poisoned to train new models with up-to-date information.

I always thought this is why the Facebooks and Googles of the world are hoovering up the data now

This reminds me of the low-background steel problem: en.m.wikipedia.org/wiki/Low-background_steel

Interesting read, thanks for sharing.

idk how to get a link to other communities but (Lemmy) r/wikipedia would like this

You link to communities like this: !wikipedia@lemmy.world

!tf2shitposterclub@lemmy.world

oo it worked! ty!

We already have open source AI. This will only affect people trying to make it better than what Stable Diffusion can do, make a new type of AI entirely (like music, but that’s not a very AI-saturated market yet), or update existing AI with new information like skibidi toilet.

Provides a wonderful avenue for poisoning the well of big tech’s notorious asset scrapers.

The best analogy I can think of:

Imagine you speak English, and you’re dropped off in the middle of the Siberian forest. No internet, old days. Nobody around you knows English. Nobody you can talk to knows English. English, for all intents and purposes, only exists in your head.

How long do you think you could still speak English correctly? 10 years? 20 years? 30 years? Will your children be able to speak English? What about your grandchildren? At what point will your island of English diverge so far from your home English that they’re unintelligible to each other?

I think this is the training problem in a nutshell.

Good. Let the monster eat itself.

Looks like i need some glasses

How many times is this same article going to be written? Model collapse from synthetic data is not a concern at any scale when human data is in the mix. We have entire series of models now trained with mostly synthetic data: huggingface.co/docs/transformers/main/…/phi3. When using entirely unassisted outputs, error accumulates with each generation, but this isn’t a concern in any real scenario.
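For anyone who wants to poke at the "error accumulates with each generation" part, here is a toy sketch. It is not an LLM experiment: the "model" is just a Gaussian fitted to the previous generation's samples, and `simulate_generations`, `real_fraction` and the default sizes are all invented for illustration. Setting `real_fraction` above zero mixes fresh "human" samples into every generation, which is the scenario described above.

```python
import random
import statistics


def simulate_generations(generations=20, sample_size=50,
                         real_fraction=0.0, seed=0):
    """Repeatedly fit a Gaussian to data, then resample from the fit.

    Returns the standard deviation of the data at each generation,
    starting with the original "human" data (true std = 1.0).
    """
    rng = random.Random(seed)

    def real_sample():
        # Stand-in for human-written data: standard normal draws.
        return rng.gauss(0.0, 1.0)

    data = [real_sample() for _ in range(sample_size)]
    history = [statistics.pstdev(data)]

    for _ in range(generations):
        # "Train" on the current data: estimate mean and spread.
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)
        # Next generation: some fresh real data, the rest model output.
        n_real = round(sample_size * real_fraction)
        data = [real_sample() for _ in range(n_real)]
        data += [rng.gauss(mu, sigma) for _ in range(sample_size - n_real)]
        history.append(statistics.pstdev(data))

    return history


if __name__ == "__main__":
    for frac in (0.0, 0.5):
        spread = simulate_generations(generations=200, real_fraction=frac)
        print(f"real_fraction={frac}: first={spread[0]:.3f} last={spread[-1]:.3f}")
```

With `real_fraction=0.0` each generation only ever sees the previous generation's output, so estimation errors compound; with real data mixed in, the estimate keeps getting pulled back toward the original distribution.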

As the number of articles about this exact subject increases, so does the likelihood of AI only being able to write about this very subject.

If AI feedback starts going the other way around, we should be REALLY scared. Imagine it just becomes sentient and superintelligent and reads all that we are saying about it.

This is completely unrelated.

Besides, how does AI suddenly become sentient?

It was a joke.

LLMs are as close to real AI as Eliza was (i.e., nowhere even remotely close).

Well then, here’s an idea for all those starving artists: start a business that makes AND sells human-made art/data to AI companies. Video yourself drawing the rare Pepe or Wojak from scratch as proof.

Anyone old enough to have played with a photocopier as a kid could have told you this was going to happen.

Blinks slowly

But, but, I have a photocopier now…

So then you know what happens when you make a copy of a copy of a copy and so on. Same thing with LLMs.

Hahahahaha

AI doing the job of poisoning itself

Maybe this will become a major driver for the improvement of AI watermarking and detection techniques. If AI companies want to continue sucking up the whole internet to train their models on, they’ll have to be able to filter out the AI-generated content.

“filter out” is an arms race, and watermarking has very real limitations when it comes to textual content.

I’m interested in this but not very familiar. Are the limitations to do with brittleness (not surviving minor edits) and the need for text to be long enough for statistical effects to become visible?

Yes. Also, non-native speakers of a language tend to follow word choice patterns similar to those of LLMs, which creates a whole set of false positives on detection.
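To put rough numbers on the "text needs to be long enough" point: the thread doesn't name a scheme, so assume a "green list" style watermark, where generation is nudged toward a secret subset of the vocabulary and the detector runs a one-proportion z-test on how many tokens land in that subset. `green_z_score` and the value `gamma=0.5` are illustrative assumptions, not any particular product's detector.

```python
import math


def green_z_score(green_count: int, total_tokens: int, gamma: float = 0.5) -> float:
    """z-score for seeing `green_count` green tokens out of `total_tokens`.

    `gamma` is the fraction of the vocabulary that is "green", i.e. the
    green rate expected from unwatermarked text.
    """
    expected = gamma * total_tokens
    std = math.sqrt(total_tokens * gamma * (1 - gamma))
    return (green_count - expected) / std


if __name__ == "__main__":
    # Same 70% green rate, different lengths.
    print(round(green_z_score(14, 20), 2))    # 1.79
    print(round(green_z_score(140, 200), 2))  # 5.66
```

The same 70% green rate gives a weak signal at 20 tokens and a strong one at 200, because the z-score grows with the square root of the length. Edits that swap out green tokens lower `green_count`, which is the brittleness side of the question.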