from kernelle@0d.gs to technology@lemmy.world on 27 Jun 13:09

https://0d.gs/post/7264097

TLDR: Testing several CDNs reveals Vercel and GitHub Pages as the fastest and most reliable options for static sites, and Cloudflare for a self-hosted origin.

The Problem

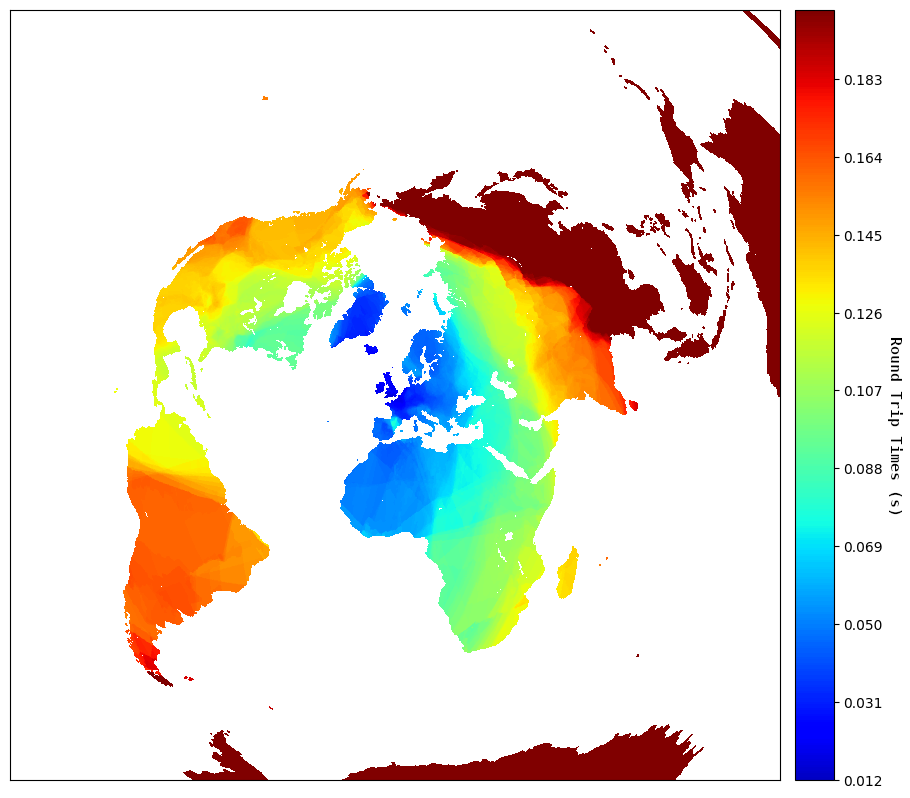

In my previous post, I achieved loadtimes in Europe of under 100ms. But getting those speeds worldwide is a geographical hurdle. My preferred hosting location has always been London because of its proximity to the intercontinental submarine fiber optic network providing some of the best connectivity worldwide.

But it’s a single server, in a single location. From the heatmap we can see the significant fall-off in response times past the 2500km line, such that a visitor from Tokyo has to wait around 8 times longer than their European counterparts.

Free Web

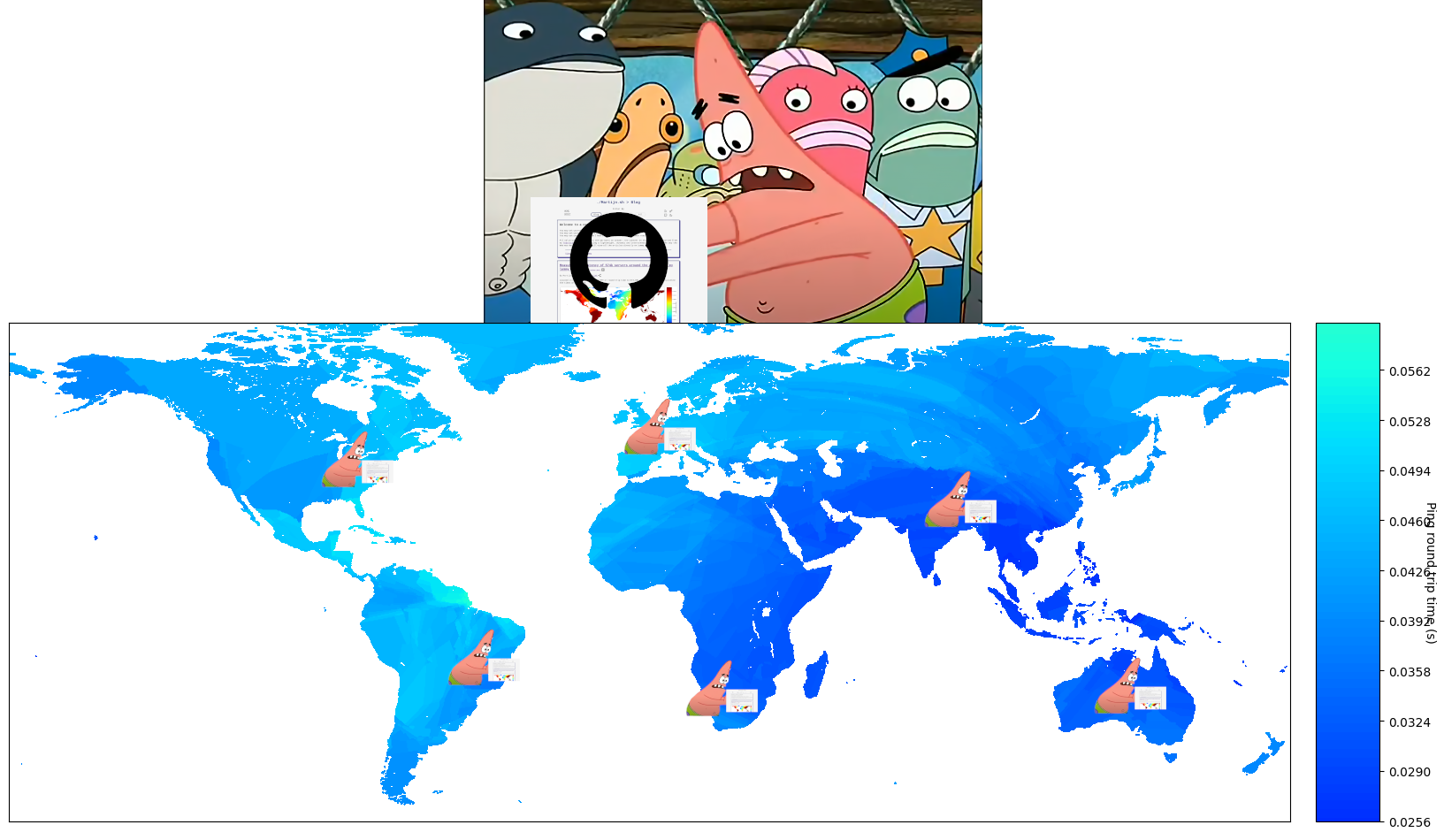

The answer is obvious: a Content Delivery Network (CDN) distributes a website in a decentralised fashion, always delivering from the server closest to the user and drastically reducing loadtimes.

I could rent servers on every continent and make my own CDN, with blackjack and … never mind! Instead, I’ll be using existing, free infrastructure. While the internet is forever changing to accommodate stock markets, many companies, organisations, and individuals still offer professional-grade services for free. We cannot overstate the value of having such accessible, professional tools ready for experimenting, exploring, and learning with no barrier to entry.

Because of these constraints, the results don’t show how good these CDNs are in general, but rather how many resources they allocate to their free tiers.

Pull vs Push CDN

A Pull CDN has to request the data from an origin server, whereas a Push CDN pre-emptively distributes code from a repository worldwide.

For our static one-pager a Push CDN is a dream: it deploys the repository worldwide in an instant and keeps it there. Pull CDNs also store a cached version, but they still need our origin server whenever that cache is missing or expired. The first visitor in a particular area might be significantly slower if no nearby server has cached the page from the origin yet. This doesn’t mean Push CDNs can’t be used for complex websites with extensive back-ends, but doing so adds complexity, optimization, and cost to the project.
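To make the first-visitor penalty concrete, here is a minimal sketch of a pull-through edge cache. The `PullEdge` class, its `ttl` and the injectable clock are hypothetical illustrations and not any provider's implementation. Real CDNs take their TTLs from `Cache-Control` headers and apply their own eviction rules.

```python
import time


class PullEdge:
    """Minimal pull-through edge cache: serve from cache, else fetch from the origin.

    Hypothetical sketch; `fetch_origin` stands in for the request to the origin server.
    """

    def __init__(self, fetch_origin, ttl, clock=time.monotonic):
        self.fetch_origin = fetch_origin
        self.ttl = ttl          # seconds an entry stays fresh
        self.clock = clock      # injectable so expiry can be simulated
        self._cache = {}        # path -> (body, time stored)

    def get(self, path):
        """Return (body, hit); hit is False whenever the origin had to be contacted."""
        now = self.clock()
        entry = self._cache.get(path)
        if entry is not None and now - entry[1] < self.ttl:
            return entry[0], True
        # Cache miss or expired entry: this is the extra trip to the origin.
        body = self.fetch_origin(path)
        self._cache[path] = (body, now)
        return body, False


if __name__ == "__main__":
    fake_now = [0.0]
    edge = PullEdge(lambda path: f"<html>{path}</html>", ttl=3600, clock=lambda: fake_now[0])
    print(edge.get("/"))  # first visitor: origin fetch, hit=False
    print(edge.get("/"))  # served from the edge, hit=True
    fake_now[0] = 48 * 3600
    print(edge.get("/"))  # 48 hours later the entry has expired: origin again
```

With a one-hour TTL, a visitor who arrives after a 48-hour gap would trigger a fresh origin fetch. A Push CDN's edges would already hold the deployed files.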

Map: Measuring round trip time to my site when using a push CDN

Edge vs Regional vs Origin

CDNs cache in different ways, at different times, using different methods. But they all describe Edge Nodes, literally the edge of the network, for the fastest delivery, and Regional Nodes, which Edge Nodes (or “Points of Presence”) draw from when they need to be updated.

Origin Nodes are usually only used with Pull CDNs, so the network knows what content to serve when no cache is available: an Edge Node asks the Regional Node, which in turn asks the Origin what to serve. Unfortunately, that means a CDN without a minimum amount of resources will be slower than not using one at all, since every cache miss adds extra hops on the way to the origin.
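The edge, regional and origin chain can be sketched as nested caches. Each cache falls back to its parent on a miss and records every hop along the way. The tier names and the single shared regional node are assumptions made for illustration. Providers differ in how many tiers they use and in how those tiers are shared.

```python
class Tier:
    """One cache layer (edge or regional) that falls back to its parent on a miss."""

    def __init__(self, name, parent):
        self.name = name
        self.parent = parent  # another Tier, or a callable standing in for the origin
        self.cache = {}

    def get(self, path, hops):
        hops.append(self.name)
        if path in self.cache:
            return self.cache[path]
        if isinstance(self.parent, Tier):
            body = self.parent.get(path, hops)
        else:
            hops.append("origin")
            body = self.parent(path)
        self.cache[path] = body  # populate this tier on the way back
        return body


def fetch(edge, path):
    """Request `path` from an edge node; return the body and the hops taken."""
    hops = []
    body = edge.get(path, hops)
    return body, hops


def demo_origin(path):
    return f"<html>{path}</html>"


if __name__ == "__main__":
    regional = Tier("regional", demo_origin)
    edge_a, edge_b = Tier("edge-a", regional), Tier("edge-b", regional)
    print(fetch(edge_a, "/")[1])  # ['edge-a', 'regional', 'origin']
    print(fetch(edge_a, "/")[1])  # ['edge-a']
    print(fetch(edge_b, "/")[1])  # ['edge-b', 'regional']
```

Only the very first request pays for the full chain. A second edge behind the same regional node stops one hop short of the origin.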

Where Push CDNs store their cache also depends on the provider, but they often use a repository that automatically updates the entire network with each new version. This means they can cache much more aggressively and efficiently across the network, resulting in faster loadtimes.

Testing

I know, I know, you’re here for numbers, so let me start with some: 3 rounds of testing from 6 continents, 98 times each, for a combined total of 588 requests spread over 34 days. Every test cycle consists of one HTTPS request per continent, using the GlobalPing network and a simple script I’ve written to interface with their CLI tool.

Varying the time between requests gives us insight into how many resources are allocated to regular users like us. We’re looking for the CDN that’s not only the fastest but also the most consistent in its loadtimes.

I’ve also included a demonstration of a badly configured CDN, which is really just two CDNs chained together. Without the two platforms talking to each other, the network gets confused and loadtimes more than double.

Finally, I’ve included ioRiver, the only platform I’ve found with Geo-Routing on a free trial. This would allow me to serve Europe from my own server and the rest of the world from an actual CDN. For the first 2 testing rounds, I configured ioRiver to serve only from Vercel, as a baseline for how much delay, if any, it adds. In the 3rd round, ioRiver routed Europe to my server and the rest of the world to Vercel.
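At its core, Geo-Routing is a lookup from the visitor's location to a backend. Below is a minimal sketch of the round-3 setup. It assumes a country code is already known for each visitor. The small `COUNTRY_TO_CONTINENT` table and `pick_backend` are hypothetical, and this is not ioRiver's configuration format or API.

```python
# Hypothetical lookup tables; a real setup would resolve the country via GeoIP.
COUNTRY_TO_CONTINENT = {
    "GB": "EU", "NL": "EU", "DE": "EU", "FR": "EU",
    "US": "NA", "BR": "SA", "JP": "AS", "ZA": "AF", "AU": "OC",
}
ROUTES = {"EU": "eu.martijn.sh"}       # round 3: Europe goes to the self-hosted server
DEFAULT_BACKEND = "vercel.martijn.sh"  # everyone else goes to Vercel


def pick_backend(country_code):
    """Return the hostname that should serve a visitor from `country_code`."""
    continent = COUNTRY_TO_CONTINENT.get(country_code.upper())
    return ROUTES.get(continent, DEFAULT_BACKEND)
```

Unknown countries fall through to the default backend. Any visitor the table doesn't recognise is therefore still served by the CDN.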

Results

We should expect my self-hosted solution to deliver the same speeds every round; this is our baseline. Any CDN with a slower average than my single Self-Hosted server is not worth considering.
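The selection rule above can be written as a small filter. This sketch assumes the measurements are already grouped into lists of milliseconds per deployment, keyed by hypothetical names such as `"Self-Hosted"`. Breaking ties on the average by population standard deviation is my own addition, meant to reflect the goal of consistency. It is not necessarily how the charts are ordered.

```python
from statistics import mean, pstdev


def summarise(samples):
    """Map each deployment to (mean ms, population standard deviation ms)."""
    return {name: (mean(ms), pstdev(ms)) for name, ms in samples.items()}


def worth_considering(samples, baseline="Self-Hosted"):
    """Deployments whose average beats the baseline, fastest first.

    Ties on the average are broken by the spread, so the more consistent
    deployment ranks higher.
    """
    stats = summarise(samples)
    baseline_avg = stats[baseline][0]
    kept = [
        (name, avg, spread)
        for name, (avg, spread) in stats.items()
        if name != baseline and avg < baseline_avg
    ]
    return sorted(kept, key=lambda row: (row[1], row[2]))
```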

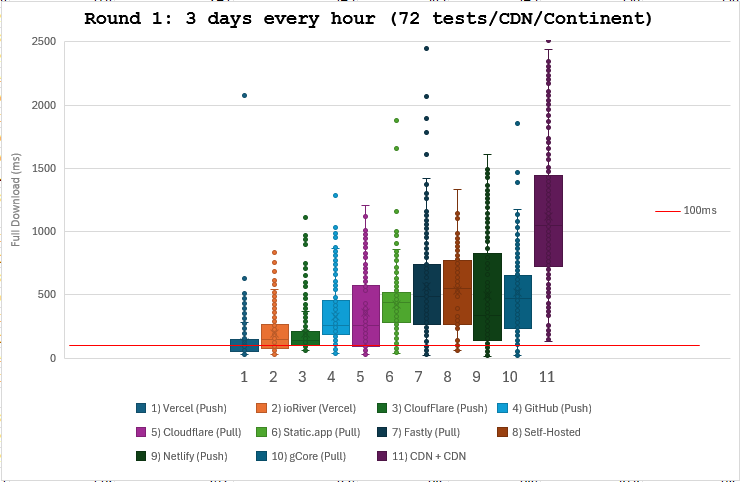

- Round 1: 3 days of requesting once every hour (72 tests)

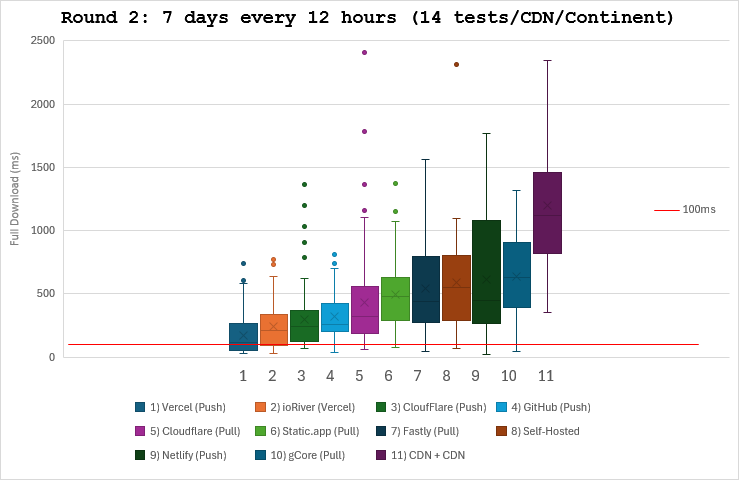

- Round 2: 7 days of requesting once every 12 hours (14 tests)

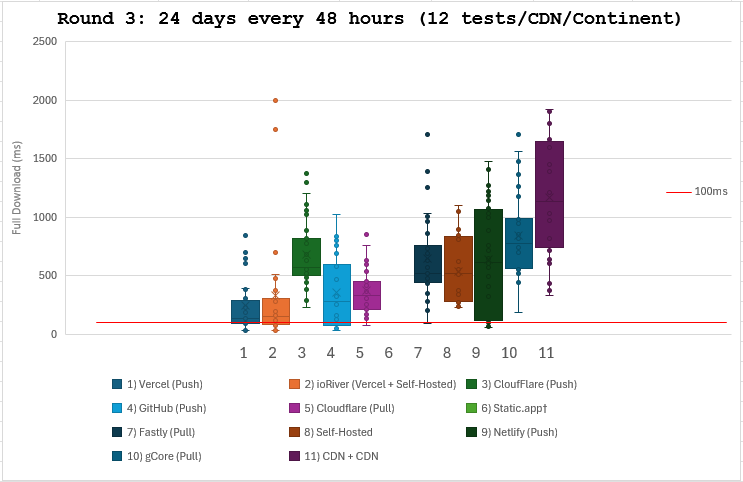

- Round 3: 24 days of requesting once every 48 hours (12 tests)
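As a quick sanity check, the three schedules add up to the totals quoted in the Testing section: 98 test cycles, 588 requests and 34 days. This assumes each cycle sends one request per continent.

```python
from datetime import timedelta

CONTINENTS = 6  # one HTTPS request per continent per test cycle

# (duration, time between test cycles) for each round
ROUNDS = {
    "Round 1": (timedelta(days=3), timedelta(hours=1)),
    "Round 2": (timedelta(days=7), timedelta(hours=12)),
    "Round 3": (timedelta(days=24), timedelta(hours=48)),
}

# timedelta // timedelta gives a whole number of cycles per round
cycles = {name: duration // interval for name, (duration, interval) in ROUNDS.items()}
total_cycles = sum(cycles.values())
total_requests = total_cycles * CONTINENTS
total_days = sum(duration.days for duration, _ in ROUNDS.values())

print(cycles)  # {'Round 1': 72, 'Round 2': 14, 'Round 3': 12}
print(total_cycles, total_requests, total_days)  # 98 588 34
```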

Round 1 - data

Frequent requests to a CDN ensure the website is cached not only in faster memory but also across a more diverse spread of edge servers.

- Pull CDNs show their strong advantage here: with the cache kept warm, they don’t need an extra request to my server

- ioRiver (Multi-CDN) is set up to serve only from Vercel, and we can see it adds a considerable delay

- Vercel, GitHub Pages, and Cloudflare (Pull) show themselves to be early leaders

Round 2 - data

Most reflective of a regular day on the site (all charts are ordered by this round)

- Some CDNs already reflect slightly slower times due to not being cached as frequently

- GitHub stands out to me in this round, being a little more stable than in the previous round

Round 3 - data

†I didn’t take Static.app’s 30-day trial into account when testing, which is why it’s absent from this final round.

- Surprisingly, Cloudflare’s Pull version pulls ahead of their Push CDN

- Adding my Self-Hosted solution to ioRiver’s Multi-CDN via Geo-Routing to Europe shows it can genuinely add stability and decrease loadtimes

Notes

It’s pretty clear by now that Vercel is throwing more money at the problem, so it shouldn’t come as a surprise that they’ve set a limit on monthly usage: a respectable 1 million requests or 100GB in total per month. For evaluation, I’ve switched my website to hosting from Vercel.

GitHub’s limits are described as a soft bandwidth limit of 100GB/month, more like a gentleman’s agreement.

As last time, I’ll probably leave the different deployments up for another month.

- martijn.sh (Vercel, for now)

- vercel.martijn.sh

- ioriver.martijn.sh

- cf.martijn.sh (Cloudflare Push)

- git.martijn.sh

- cdn.martijn.sh (Cloudflare Pull)

- staticapp.martijn.sh

- fastly.martijn.sh

- eu.martijn.sh (Self-Hosted)

- netlify.martijn.sh

- gcore.martijn.sh

- cdncdn.martijn.sh

Code / Scripts on GitHub

These scripts are provided as is. I’ve made them partly configurable through CLI arguments, but some hard-coded changes are still required if you’re planning on using them yourself.

CDN Test

ping.py

Interfaces with GlobalPing’s CLI tool. It completes an HTTPS request for every subdomain (i.e. every deployment) from every continent equally, with plenty of rate limiting. In hindsight, interfacing with their API might’ve been a better use of time…
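The core loop of ping.py probes each deployment from each continent, with a pause between requests. It might look roughly like the sketch below. `send` is a hypothetical placeholder for whatever actually triggers the GlobalPing measurement. The `MinIntervalLimiter` class and its fixed-interval strategy are assumptions, not GlobalPing's documented limits.

```python
import itertools
import time


class MinIntervalLimiter:
    """Keep at least `interval` seconds between consecutive calls to wait()."""

    def __init__(self, interval, clock=time.monotonic, sleep=time.sleep):
        self.interval = interval
        self.clock = clock
        self.sleep = sleep
        self._last = None

    def wait(self):
        now = self.clock()
        if self._last is not None:
            remaining = self.interval - (now - self._last)
            if remaining > 0:
                self.sleep(remaining)
                now = self.clock()
        self._last = now


def run_cycle(domains, continents, send, limiter):
    """One test cycle: every deployment probed once from every continent.

    `send(domain, continent)` is a hypothetical placeholder for the call that
    issues the measurement; it is not part of GlobalPing's CLI or API.
    """
    results = []
    for domain, continent in itertools.product(domains, continents):
        limiter.wait()
        results.append((domain, continent, send(domain, continent)))
    return results
```

Because the clock and sleep functions are passed in, the limiter can be tested without any real waiting.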

parseGlobalPing.py

Parses all files generated by GlobalPing during ping.py, calculates averages, and returns the data as pretty-printed text or CSV (I’m partial to a good spreadsheet…). Easy to tweak with CLI arguments.
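Here is a minimal sketch of the averaging and CSV step. It assumes the GlobalPing result files have already been reduced to `(deployment, continent, ms)` tuples. GlobalPing's real output format is not reproduced here, and the function names are hypothetical.

```python
import csv
import io
from collections import defaultdict
from statistics import mean


def averages(records):
    """Average response time per (deployment, continent)."""
    grouped = defaultdict(list)
    for deployment, continent, ms in records:
        grouped[(deployment, continent)].append(ms)
    return {key: mean(values) for key, values in grouped.items()}


def to_csv(avgs, continents):
    """One row per deployment, one column per continent, plus the mean of those columns."""
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["deployment", *continents, "average"])
    for deployment in sorted({d for d, _ in avgs}):
        row = [avgs.get((deployment, c)) for c in continents]
        present = [v for v in row if v is not None]
        cells = ["" if v is None else f"{v:.1f}" for v in row]
        overall = f"{mean(present):.1f}" if present else ""
        writer.writerow([deployment, *cells, overall])
    return out.getvalue()
```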

CDN Testing Round

Ping every 12h from every continent (hardcoded domains & time):

    $ python3 ping.py -f official_12h -l 100

Parse, calculate, and pretty print all pings:

    $ python3 parseGlobalPings.py -f official_12h

Heatmap

masscan - discovery

masscan 0.0.0.0/4 -p80 --output-format=json --output-filename=Replies.json --rate 10000

Scans a portion of the internet (0.0.0.0/4, one sixteenth of the IPv4 address space) for servers with an open port 80, traditionally used for serving a website or a redirect.
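`query.py --masscan` turns Replies.json into IPList.txt. An independent sketch of that conversion is below. It assumes masscan writes one JSON record per line, each with an `ip` field and a `ports` list. It skips the bracket lines, trailing commas and anything else it cannot parse. `masscan_ips` is a hypothetical name, not a function from the original scripts.

```python
import json


def masscan_ips(lines, port=80):
    """Collect unique IPs with `port` open from masscan's line-per-record JSON output."""
    ips = []
    found = set()
    for line in lines:
        line = line.strip().rstrip(",")  # drop the comma between records
        if not line.startswith("{"):
            continue  # skip "[" / "]" and blank lines
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip anything that is not a valid record
        for entry in record.get("ports", []):
            if entry.get("port") == port and entry.get("status") == "open":
                ip = record.get("ip")
                if ip and ip not in found:
                    found.add(ip)
                    ips.append(ip)
    return ips
```

You can pass it the lines of Replies.json, for example an open file object. It returns IPs in discovery order, ready to be written one per line to IPList.txt.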

hpingIps.sh - measurement

Because masscan doesn’t record RTTs, I used hping for the measurements. Nmap is a good choice as well, but hping is slightly faster. After my scan I found MassMap, which wraps Masscan and Nmap nicely together. I’ll update this once I’ve compared its speed to my implementation.

This is a quick and dirty script to use hping and send one packet to port 80 of any host discovered by masscan.
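hping sends a raw TCP SYN and times the SYN-ACK, which requires root. The sketch below is a swapped-in alternative and not what hpingIps.sh does: it times an ordinary TCP `connect()` to port 80. The handshake completes after roughly one round trip, so the duration approximates RTT, with a little extra OS overhead. The function name and the default timeout are assumptions.

```python
import socket
import time


def tcp_connect_rtt(host, port=80, timeout=2.0):
    """Approximate RTT in milliseconds as the time to complete a TCP handshake.

    Uses a normal connect() instead of hping's raw SYN, so no root is needed.
    Returns None if the host refuses or does not answer within `timeout`.
    """
    start = time.perf_counter()
    try:
        with socket.create_connection((host, port), timeout=timeout):
            pass  # handshake done; close immediately
    except OSError:
        return None
    return (time.perf_counter() - start) * 1000.0
```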

query.py - parse and locate

Its primary and original function is to query the GeoLite2 database with an IP address to get a rough estimate of the host’s physical location for plotting a heatmap. It can now also estimate the distance between my server and another using the Haversine formula.
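The Haversine formula gives the great-circle distance between two latitude/longitude points on a sphere. Below is a minimal sketch that assumes a mean Earth radius of 6371 km. query.py's own implementation may differ, and GeoLite2 coordinates are only rough estimates to begin with.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two (latitude, longitude) points in degrees."""
    phi1, phi2 = radians(lat1), radians(lat2)
    dphi = radians(lat2 - lat1)
    dlmb = radians(lon2 - lon1)
    a = sin(dphi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(dlmb / 2) ** 2
    # min() guards against rounding pushing the argument just above 1
    return 2 * EARTH_RADIUS_KM * asin(min(1.0, sqrt(a)))
```

For example, London (51.5074, -0.1278) to Tokyo (35.6762, 139.6503) comes out at roughly 9,560 km, well past the 2500km line on the heatmap.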

plot.py

Creates a heatmap from the output of query.py (longitude, latitude, and RTT) using Matplotlib.
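Before a heatmap can be drawn, the scattered points have to be grouped onto a grid. The sketch below bins `(longitude, latitude, rtt)` points into square cells and keeps the median RTT for each cell. The 5° cell size and the choice of median are assumptions. plot.py may aggregate differently before handing its data to Matplotlib.

```python
from collections import defaultdict
from statistics import median


def bin_rtts(points, cell_deg=5.0):
    """Group (longitude, latitude, rtt_ms) points into square grid cells.

    Returns {(lon_corner, lat_corner): median RTT}, with each key being the
    south-west corner of its cell in degrees.
    """
    cells = defaultdict(list)
    for lon, lat, rtt in points:
        key = (cell_deg * (lon // cell_deg), cell_deg * (lat // cell_deg))
        cells[key].append(rtt)
    return {key: median(values) for key, values in cells.items()}
```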

query.py and plot.py are forked from Ping The World! by Erik Bernhardsson, which is over 10 years old. The new scripts fix many issues and are much improved.

Graph plot

Masscan (command mentioned above), which writes Replies.json

Masscan -> IPList:

    $ python3 query.py --masscan > IPList.txt

IPList -> RTT:

    # sh hpingIps.sh

RTT -> CombinedLog:

    $ python3 query.py > log_combined.txt

CombinedLog -> Plot:

    $ python3 plot.py



Very interesting read. Thanks for that.

Nice to hear! I’m glad you enjoyed it.