Scientific Journals Are Publishing Papers With AI-Generated Text

(www.404media.co)

from Stopthatgirl7@lemmy.world to technology@lemmy.world on 19 Mar 2024 03:47

https://lemmy.world/post/13286289

The ChatGPT phrase “As of my last knowledge update” appears in several papers published by academic journals.

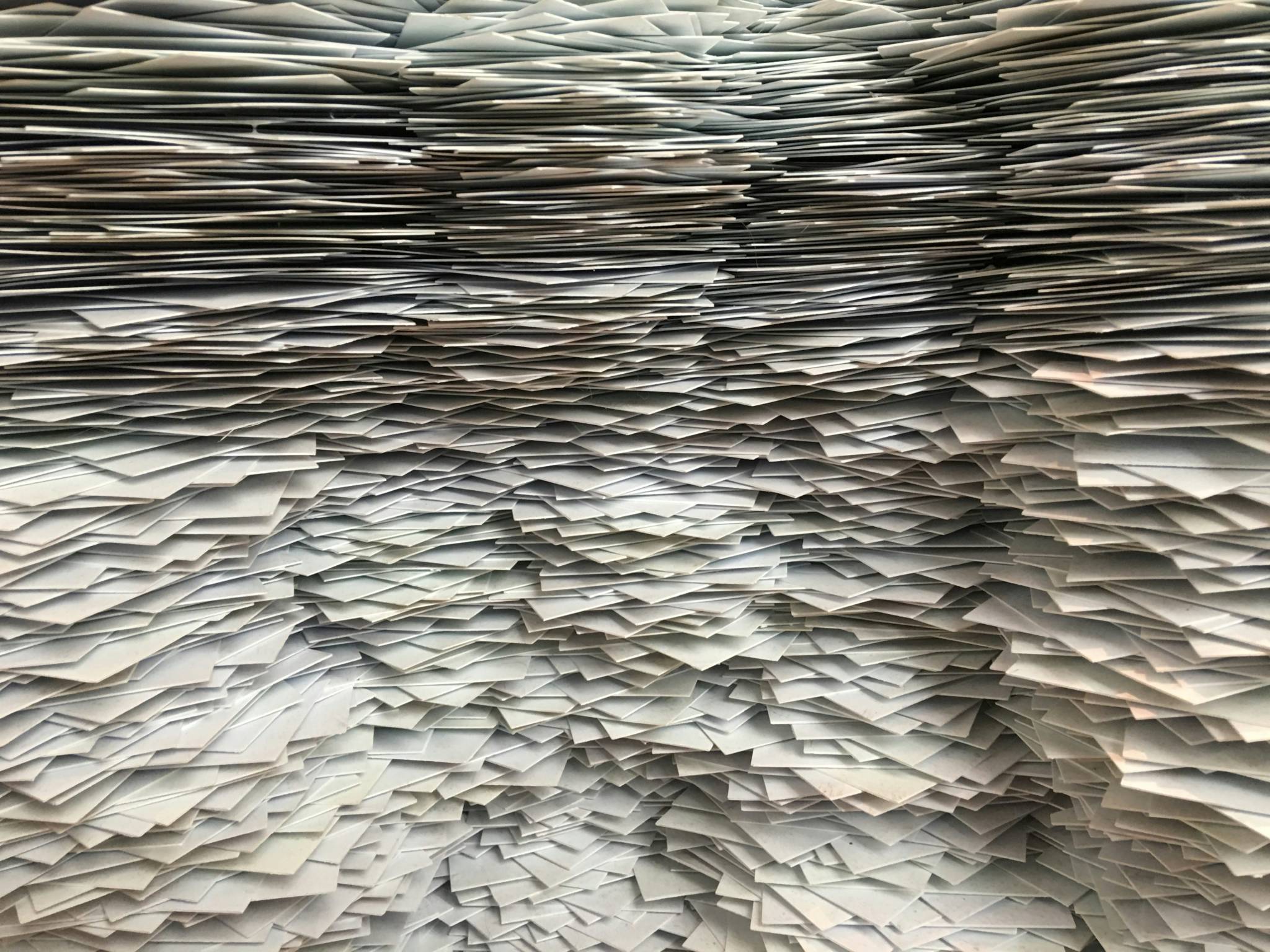

This really just shines a light on a more significant underlying problem with scientific publication in general, that being that there's just way too much of it. "Publish or perish" is resulting in enormous pressure to churn out papers whether they're good or not.

As an outsider with a researcher PhD in the family, I suspect it's an issue of how the institutions measure success. How many papers? How many cites? Other metrics might work, but probably not as broadly. I assume they will also care about the size of your staff, how much grant money you get, patents held, etc.

I suspect that, short of a Nobel prize, it is difficult to objectively measure how one is advancing scientific progress (without a PhD committee to evaluate it.)

Yeah, that’s my sense too, as someone within low-level academia. Bibliometrics and other attempts to quantify research output have been big in the last few decades, but I think that they have made the problem worse if anything.

It’s especially messy when we consider the kind of progress and contribution that Nobel prizes can’t account for, like education and outreach. I really like how Dr Fatima explores this in her video How Science Pretends to be Meritocratic (duration: 37:04).

The saying "when a measure becomes a target it ceases to be a good measure" (Goodhart's Law) has been making the rounds online recently, and this is a good example of it.

Ironically, this is a common problem faced when training AIs too.

So I couldn’t find a membership-free version of this article, and I’m not planning to sign up for another website, so I’m commenting on what I can see.

Edit: I signed up with 10 minute mail; it’s an okay article.

I did the same search on Google Scholar, and it gave me 188 results. A good chunk of them are actually legitimate papers that discuss ChatGPT / AI capabilities and quote responses from it. Still, a lot of papers that have nothing to do with machine learning have the same text in them, which both surprises me and doesn’t.

As FaceDeer pointed out, the number of papers schools have to churn out each year is astounding, and there are bound to be unremarkable ones. Most of them are, actually. When something becomes a chore, people will find an easier way to get through it. I wouldn’t be surprised if there were actually more papers that used ChatGPT to generate parts of the text but didn’t include the quote. Students were already doing that with Wikipedia for their homework before ChatGPT was even a thing; this is just a better version of it. To be fair, it is a powerful tool that aggregates information from a single line of text, and most of the time it’s reliable. Most of the time. That’s why you have to do your own research and verify its validity afterwards. I have used Microsoft’s Copilot, and while I do like that it gives me sources, it sometimes still gives me stuff that the original source did not say.

What I am surprised about is that the professor, the institute, or even the publisher didn’t think to do even a basic amount of verification, and let something so blatantly obvious slip through. Some of the quotes appear right at the beginning of a paragraph, which is just laughable.
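For what it's worth, the kind of basic check I mean could be as simple as the rough sketch below. The function name and the phrase list are made up for illustration; the only phrase taken from the article is “As of my last knowledge update”, and this is not how any journal or publisher actually screens submissions.

```python
import re

# Hypothetical phrase list: only this one phrase comes from the article.
TELLTALE_PHRASES = [
    "as of my last knowledge update",
]


def find_telltale_phrases(text, phrases=TELLTALE_PHRASES):
    """Return (phrase, offset) pairs for each case-insensitive match.

    Offsets refer to the whitespace-normalized, lowercased text,
    not to the original input.
    """
    # Collapse runs of whitespace so a phrase split across lines still matches.
    normalized = re.sub(r"\s+", " ", text).lower()
    hits = []
    for phrase in phrases:
        for match in re.finditer(re.escape(phrase), normalized):
            hits.append((phrase, match.start()))
    return hits


if __name__ == "__main__":
    sample = "Intro.\nAs of my last\nknowledge update, the data show..."
    for phrase, offset in find_telltale_phrases(sample):
        print(f"found '{phrase}' at offset {offset}")
```

A match would only be a flag for a human to look at, since (as noted above) plenty of legitimate papers quote ChatGPT on purpose.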

I’m going to start giving presentations at work this way.

Papers are supposed to be peer reviewed by an independent program committee…

It’s like a Sokal Hoax for STEM.

The scientific community is a mess. We need to be able to rely on journals and authors to have an understanding of the world. At this point we need to reverse course hard. Anyone who authors a paper like this is banned. Any journal that publishes one is banned. Any reviewer who approves one is banned. Any professor who approves one is sanctioned (they aren’t reviewers after all) depending on the number they’ve let slip through. P-hacking and similar issues are dealt with appropriately, again scaling with frequency and magnitude of offense. Tighten the p-value threshold by lowering it from 0.05 to 0.01. Encourage replication and null results. Abolish or severely reduce journal fees. Provide incentives for replication and review. Etc.

Heavy on the “peer”, light on the “review”