Very, Very Few People Are Falling Down the YouTube Rabbit Hole | The site’s crackdown on radicalization seems to have worked. But the world will never know what was happening before that

(www.theatlantic.com)

from L4s@lemmy.world to technology@lemmy.world on 01 Sep 2023 22:00

https://lemmy.world/post/4275438

Very, Very Few People Are Falling Down the YouTube Rabbit Hole | The site’s crackdown on radicalization seems to have worked. But the world will never know what was happening before that::The site’s crackdown on radicalization seems to have worked. But the world will never know what was happening before that.

Requesting a paywall circumvention.

Done. Check my comment.

The article below:

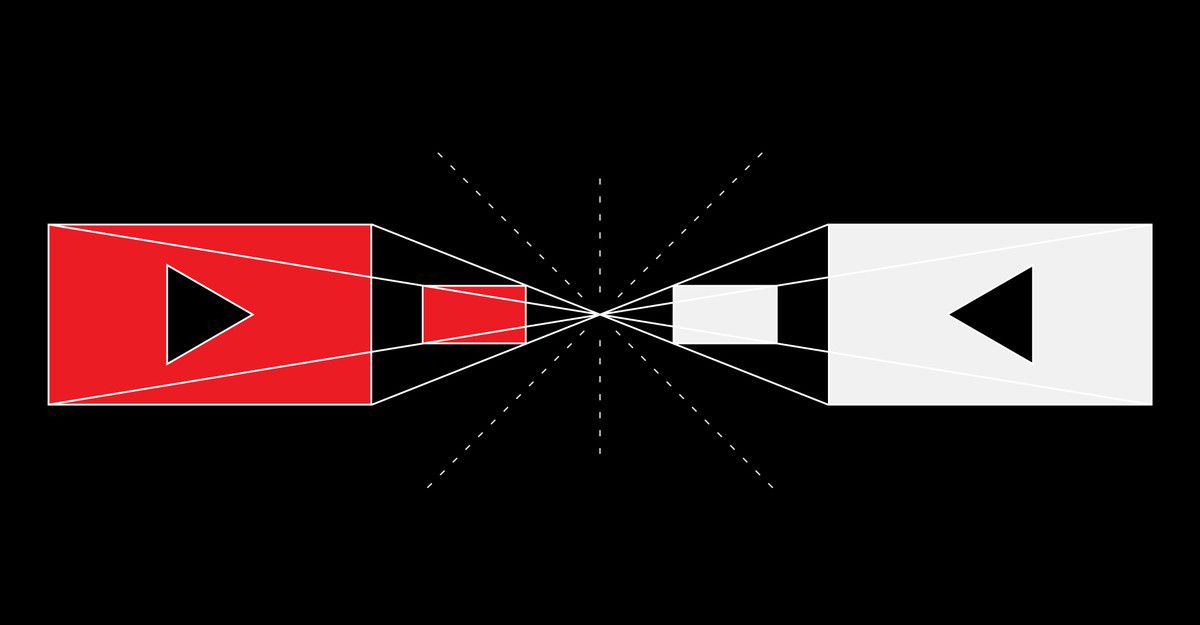

Around the time of the 2016 election, YouTube became known as a home to the rising alt-right and to massively popular conspiracy theorists. The Google-owned site had more than 1 billion users and was playing host to charismatic personalities who had developed intimate relationships with their audiences, potentially making it a powerful vector for political influence. At the time, Alex Jones’s channel, Infowars, had more than 2 million subscribers. And YouTube’s recommendation algorithm, which accounted for the majority of what people watched on the platform, looked to be pulling people deeper and deeper into dangerous delusions.

The process of “falling down the rabbit hole” was memorably illustrated by personal accounts of people who had ended up on strange paths into the dark heart of the platform, where they were intrigued and then convinced by extremist rhetoric—an interest in critiques of feminism could lead to men’s rights and then white supremacy and then calls for violence. Most troubling is that a person who was not necessarily looking for extreme content could end up watching it because the algorithm noticed a whisper of something in their previous choices. It could exacerbate a person’s worst impulses and take them to a place they wouldn’t have chosen, but would have trouble getting out of.

Just how big a rabbit-hole problem YouTube had wasn’t quite clear, and the company denied it had one at all even as it was making changes to address the criticisms. In early 2019, YouTube announced tweaks to its recommendation system with the goal of dramatically reducing the promotion of “harmful misinformation” and “borderline content” (the kinds of videos that were almost extreme enough to remove, but not quite). At the same time, it also went on a demonetizing spree, blocking shared-ad-revenue programs for YouTube creators who disobeyed its policies about hate speech. Whatever else YouTube continued to allow on its site, the idea was that the rabbit hole would be filled in.

A new peer-reviewed study, published today in Science Advances, suggests that YouTube’s 2019 update worked. The research team was led by Brendan Nyhan, a government professor at Dartmouth who studies polarization in the context of the internet. Nyhan and his co-authors surveyed 1,181 people about their existing political attitudes and then used a custom browser extension to monitor all of their YouTube activity and recommendations for a period of several months at the end of 2020. The study found that extremist videos were watched by only 6 percent of participants. Of those people, the majority had deliberately subscribed to at least one extremist channel, meaning that they hadn’t been pushed there by the algorithm. Further, these people were often coming to extremist videos from external links instead of from within YouTube.

These viewing patterns showed no evidence of a rabbit-hole process as it’s typically imagined: Rather than naive users suddenly and unwittingly finding themselves funneled toward hateful content, “we see people with very high levels of gender and racial resentment seeking this content out,” Nyhan told me. That people are primarily viewing extremist content through subscriptions and external links is something “only [this team has] been able to capture, because of the method,” says Manoel Horta Ribeiro, a researcher at the Swiss Federal Institute of Technology Lausanne who wasn’t involved in the study. Whereas many previous studies of the YouTube rabbit hole have had to use bots to simulate the experience of navigating YouTube’s recommendations—by clicking mindlessly on the next suggested video over and over and over—this is the first that obtained such granular data on real, human behavior.

The study does have an unavoidable flaw: It cannot account for anything that happened on YouTube before the data were collected, in 2020. “It may be the case that the susceptible population was already radicalized during YouTube’s pre-2019 era,” as Nyhan and his co-authors explain in the paper. Extremist content does still exist on YouTube, after all, and some people do still watch it. So there’s a chicken-and-egg dilemma: Which came first, the extremist who watches videos on YouTube, or the YouTuber who encounters extremist content there?

Examining today’s YouTube to try to understand the YouTube of several years ago is, to deploy another metaphor, “a little bit ‘apples and oranges,’” Jonas Kaiser, a researcher at Harvard’s Berkman Klein Center for Internet and Society who wasn’t involved in the study, told me. Though he considers it a solid study, he said he also recognizes the difficulty of learning much about a platform’s past by looking at one sample of users from its present. This was also a significant issue with a collection of new studies about Facebook’s role in political polarization,

Another way to put that study’s weakness, in scientific terms, is that there’s no control group against which the studied group is being compared. There’s zero indication that the 2019 changes had any effect at all, without some data from before those changes.

Always love when people try to hold social sciences to the same standard as physical sciences

The subtitle of the article is “The site’s crackdown on radicalization seems to have worked. But the world will never know what was happening before that.”

How do you draw a conclusion about trends based on data collected from one period of time?

I’ve never heard of Rumble before, apparently it’s a video platform and the company that owns Truth social, so it’s very popular with the far right

Honestly don’t mean this as an attack, but couldn’t people have just clicked on the link, if they really wanted to read the article?

It is paywalled and someone explicitly requested it in the comments.

Fair enough. Thanks for sharing it (didn’t realize it was paywalled).

Bro people were eating tidepods and we saw a resurgence of nazism and white nationalism.

I think we at least know the effects of what was happening before.

Could we convince the Nazis to eat the tide pods?

Just get 4chan to convince the imbeciles that it’s a white supremacist symbol like they did with the okay sign.

You got that turned around. 4chan convinced politicians/pundits the ok symbol was white supremacist. Honestly, it worked, but they should have picked the shocker. Would have actually been funny.

Exactly, the imbeciles. Which is even more funny considering the original intention was to convince liberals and other lefties that the ok symbol was racist just for the hell of it, but it backfired and only braindead nazis and fascists ended up believing it.

Maybe it was a response to “Trap” suddenly becoming transphobic? That’s not a hill that’s worth dying on, but it seems like overnight that term became problematic.

Anyway, neo-nazis love hidden symbols. Even if they aren’t hidden at all.

4chan is an early adopter of memes. Unpopular memes tend to go through 4chan and fizzle. Popular ones go through 4chan and then get big. I don’t know the causal relationship.

The 👌 sign is still a white power movement sign, even if it’s used by youths and politicians trying to get down with the core.

I’d hazard a guess right wing superiority groups are epidemic with imposter syndrome, with Grand Wizards and Three-Percenter lieutenants doubting their validity more than eggs and questioning gays. Heck, Donald Trump, former President of the United States is like the god emperor of imposter syndrome.

Only if you want it to. For 99.99% of the population the okay sign is still the okay sign.

It’s a Poe’s Law kind of situation. Some people were saying dumb things about the OK sign because they were pretending to be idiots. Actual idiots see it and start doing it because they’re actual white supremacists doing a sign they heard about from the internet to indicate their affiliation.

…something something… making your whites whiter… they’ll get the message they’re after

apparently there weren’t really any people eating tide pods

I’m pretty sure more people did it after it blew up in the ‘news’ than ever did it before that point.

Wait what? Maybe I’m misunderstanding, but this is what I got out of the article:

“We had anecdotes and preliminary evidence of a phenomenon. A robust scientific study showed no evidence of said phenomenon. Therefore, the phenomenon was previously real but has now stopped.”

That seems like really, really bad science. Or at least, really really bad science reporting. Like, if anecdotes are all it takes, here’s one from just a few weeks ago.

I left some Andrew Tate-esque stuff running overnight by accident and ended up having to delete my watch history to get my homepage back to how it was before.

From the quoted bit it sounds like there was credible science that found nothing. That doesn’t mean there is nothing, but just that they found nothing.

What a load of dog dung that article is. Justifying censorship, labelling everything that is not liked by some politicial “expert” as far right extreme.

Censorship is when a platform refuses to host my lies. Because the platform is infringing on my right to checks notes force them to publish my content.

Censorship of online content is good, but simultaneously the censorship of sexually explicit books in elementary schools is evil.

Neat.

When an algorithm is involved, things change. These aren’t static websites that only get passed around by real people. This is some bizarre pseudo intelligence that thinks if you like WWII history and bratwurst, that you’d also like neo-nazi content. That’s not an exaggeration. One of my very left leaning friends started getting neo-nazi videos suggested to him and I suspect it was for those reasons.

Also, youtube isn’t a free speech platform. It’s an advertisement platform. Fediverse is a free speech platform, although it’s free speech for the person paying the hosting bills.

Recently watched a documentary called ‘The YouTube Effect’ by Alex Winter (Bill of Bill and Ted) which goes into how YouTube was essential in the current global state of radicalized individuals.

In the earlyish days of the internet (late 1990s / early 00s) I fell deep down the rabbit hole of right wing hate and conspiracy theories…

One subject of the doc explains his descent. It is almost exactly mine. Only these days it is hyper stimulated, laser targeted, data driven, psychological warfare, wrapped in polished, billionaire backed campaigns.

It comes at you from wherever you are.

Crypto bros. Health/hydro bros. incel bros. Christian bros. Muslim bros. Rogan bros. Peterson bros. Elon bros. Tech bros. Anon bros. etc.

By the time a lot of people realize what’s happened, if ever, they’re already in too deep.

Hmm I’m sensing a theme here…

Loneliness, lack of purpose, which then gets fulfilled by a relatively small community driven by defending an idea, ideology and/or an individual.

Yup, it’s the same old song of fascists and cultists.

“Are you lonely, sad, angry, or just generally dissatisfied with your life? Have you tried blaming your problems on a minority with less power in society than yourself? Act now, and I’ll throw in a second minority, free!*”

*Just pay shipping and handling.

Interestingly, those are also very common among people showing signs of conspiratorial thinking.

I think I fell in the other YouTube rabbit hole? My recs are all progressive podcasters, history video essays, and YouTube creator podcasts that complain about YouTube?

💀

humblebrag energy

Leftists aren’t immune. My YouTube has a lot of Vaush, Hasan, Sam Seder, etc.

Though I do also get Patrick Bet David and PragerU thrown in too, I think because I can’t help but watch for a window into their line of thinking.

It is not quite the same. It recommends things you watch. If you watch Hasan you tend to get more Hasan stuff but only on rare occasions do you get Vaush stuff.

Back in the day you could watch one non-political Thunderf00t video about some scam and the recommendations would be a rogues’ gallery of anti-SJWs with no other recommendations.

Now you can get radicalized because you want to be and it’s a nice saunter down the hill. Then it was a sheer cliff you could accidentally fall into. If you didn’t experience it you can’t really imagine how stupid it was.

There’s a recent documentary movie about that called Bros I suggest you check it out.

Bro! I don’t know if there’s a theme, bro! But, bro, I’ll look into it, bro.

Bro.

Broooooo, that’s too much bro for any one bro to have to look into, bro.

I like to watch videos of media critiques. Somehow all the ones that I keep getting recommended are anti woke d bags that blame every bad movie choice on the company/producer/director/etc going woke. I’ve pretty much had to stop watching those types of videos and try to rebalance the algorithm by watching literally anything that seems remotely left leaning. It’s been 2 months and it’s barely better.

Patrick H. Willems. Thomas Flight. Now You See It. Cinema Therapy. Pop Culture Detective. jstoobs

Pop Culture Detective is great, for the most part.

Yeah a lot of channels used to be actual discussion now it’s all culture war bs.

I have resorted to extreme methods of recommendation algorithm avoidance such as, but not limited to:

Multiple accounts.

Proxies and anonymous services like startpage.com anonymous view.

Non-YouTube clients like Piped or Invidious.

Using multiple browsers instead of a single google spyware app.

Yeah I’m just watching less because I don’t want to spend the time doing that. It’s irritating either way but at least this way I can justify it since I’m not putting in any time.

There’s one channel I love watching for the behind the scenes news on movie studios, but then it goes anti-woke so hard sometimes that I literally just have to stop the video mid-viewing.

The anti-woke crowd think the woke crowd are so annoying, but they never themselves stop to think about how the anti-woke crowd can be so annoying as well.

What do you mean by ‘it coming at you’ and ‘being too deep’?

I recommend you watch this video to answer your question youtu.be/P55t6eryY3g?si=TPMvx5YmF0XANLXB

Here is an alternative Piped link(s): piped.video/P55t6eryY3g?si=TPMvx5YmF0XANLXB

Piped is a privacy-respecting open-source alternative frontend to YouTube.

I’m open-source, check me out at GitHub.

Not OP but I’m guessing the algorithms that recommend videos have gone down one direction, so you’re in an echo chamber where it seems like that is everything there is. You’d never hear a counter-argument; only ever one side of the argument.

Whatever your interest or hobby, there is a psyop devoted to it. Wherever you are, whatever you do, whatever you’re curious about, you will find targeted propaganda.

Because of the methods used. The conspiracies wrapped in a cozy blanket of semi truth and emotional manipulation make it easy to fall prey to.

If you’re angry, it will make you angrier. Violent, even. If you’re happy, it can make you hate with the loving joy of false religious zeal. If you are confused and uncertain, it will provide the esoteric truths you seek, with the absolute certainty of a “final solution”

Etc

And it’s difficult to unwind.

We need more liberal critical thinking bros.

Funny how the left doesn’t have any bros.

We’ve got brothers.

I was aware of this study when they presented it virtually (can’t remember where), and while I don’t have an issue with their approach and results, I’m more concerned about the implications of these numbers. The few percent that were exposed to extremist content may seem small. But scaling that up to population level, personally that is worrisome to me … The impact of the few very very bad apples can still be catastrophic.

Why do we need to know what happened before? A record of the past is just material radicals can use to radicalize others.

Don’t worry, they know how to radicalize people just fine.

Weird. Youtube keeps recommending right wing videos even though I’ve purged them from my watch history and always selected Not Interested. It got to the point that I installed a 3rd party channel blocker.

I don’t even watch too many left leaning political videos and even those are just tangentially political.

I’ve been watching tutorials on jump ropes and kickboxing. I do watch YouTube shorts, but lately I’m being shown Andrew Tate stuff. I didn’t skip it quick enough, now 10% of the things I see are right leaning bot created contents. Slowly gun related, self defense, and Minecraft are taking over my YouTube shorts.

If you don’t already, you can view your watch history and delete things.

I do that with anything not music related, and it keeps my recommendations extremely clean.

Kickboxing to Andrew Tate is unfortunately a short jump for the algorithm to make, I guess

I like a few Minecraft channels, but I only watch it in private tabs because I know yt will flood my account with it if I’m not careful. There is no middle ground with The Algorithm.

Yeah it’s too much skewed by recent viewing. Even if you’re subscribed to X amount of channels about topic Y but you just watched one video on topic Z, then say goodbye to Y, you only like Z now.

I know everyone likes to get conspiratorial on this but it’s really just trying to get your attention any way possible. There are more popular right wing political videos, so the algorithm is more likely to suggest them. These videos also get lots of views so again, more likely to be suggested.

Just ignore them and watch what you like

I’ve already said I installed a channel blocker to deal with the problem, but it’s still annoying that a computer has me in their database as liking right wing shit. If it was limited to just youtube recommendations, it would be nothing, but we’re on a slow burn to a dystopian hell. Google has no reason not to use their personality profile of me elsewhere.

I made this comment elsewhere, but I have a very liberal friend who’s German, likes German food, and is into wwii era history. Facebook was suggesting neo-nazi groups to him.

I watch a little flashgitz and now I’m being recommended FreedomToons. I get that some people who like flashgitz are going to be terrible, but I shouldn’t have to click Not Interested more than once.

What are you using to block channels?

addons.mozilla.org/en-US/firefox/…/blocktube/

I’m sure YouTube hangs on to that data even if you delete the history. I would guess that since you don’t watch left wing videos much their algorithm still thinks you are politically right of center? Although I would have expected it to just give up recommending political channels altogether at some point. I hardly ever get recommendations for political stuff, and right wing content is the minority of that

I watch some left wing stuff, but I prefer my politics to be in text form. Too much dramatic music and manipulative editing even in things I agree with. The algorithm should see me as center left if anything, but because I watch some redneck engineering videos(that I ditch if they do get political), it seems to think I should also like transphobic videos.

i think if you like economics or fast cars you will also get radical right wing talk videos. if you like guns it’s even worse.

Nah. Cars or money has nothing to do with it. I’ve never once gotten any political bullshit and those two topics are 60% of what I watch.

i made a fresh google account specifically to watch daily streams from one stocks channel (the guy is a liberal) and i got cars, guns, right wing politics in the feed.

my general use account suggestion feed is mostly camera gear, leftist video essays and debate bro drama.

Uncle Bruce?

GET OUT OF MY HOUSE

Bargoooooons!!

I started to get into atheist programs and within a month I was getting targeted ads trying to convert me.

I started getting into Motorsport recently. I just get the odd video essay on racing and videos similar to Top Gear like Overdrive. I don’t get any right wing stuff or guns. But I’m also in the UK so it probably uses that too. For Americans maybe it’s like “ah other Americans that like fast cars also like guns, here you go”

Oh, you like WW2 documentaries about how liberal democracy crushed fascism strategically, industrially, scientifically and morally?

Well you might enjoy these videos made by actual Nazis complaining about gender neutral bathrooms!

Indicating “not interested” shows engagement on your part. Therefore the algorithm provides you with more content like that so that you will engage more.

You can try blocking the channel, which has mixed results for the same reason, or closing youtube and staying away from it for a few hours on that account.

If clicking Not Interested increases the likelihood of getting more of the same, then all the more reason to run ad blockers.

The Channel Blocker is a 3rd party tool. It just hides the channel from view. Google shouldn’t know I’m doing it.

I don’t know if this is accurate or not, but it’s the most nonsensical thing I’ve heard in a while. If engaging with something to say, “I don’t want to see this,” results in more of that content - the user will eventually leave the platform. I’m having this concern right now with my Google feed. I keep clicking not interested, yet continue getting similar content. Consequently, I’m increasingly leaning toward disabling the functionality because I’m tired of fucking seeing shit I don’t care to see. Getting angry just thinking about it.

I can only offer my own experience as evidence, but this is what I was advised to do (stop engaging by not selecting anything) and it worked. Prior to that I kept getting tons of stuff that I didn’t want to see, but it stopped within a few days once I stopped engaging with it. And I agree, it is infuriating.

Because I got this advice from someone else, I guess it has worked for others too.

I think your home IP affects recommendations.

I’m hopefully wrong because it might have GDPR implications, but on the other hand they’re probably using an LLM, and once they’re on the back of that tiger they can’t let go of the tail.

Yeah I didn’t even know who he was until a few months ago. Yet he is the top channel.

YouTube/Google knows who you are and has a profile of you and your interests no matter what you do.

You have to truly obfuscate your identity to escape it.

By getting butthurt from seeing objectionable content it is still interaction and the algorithm links it to you. Your likes and dislikes are both part of your identity and they know that and use it.

Because interaction with the website is all that matters, happy or angry they don’t care.

In fact, they probably prefer you to be butthurt because you are more engaged.

Now if we could just purge this website of HexBear morons.

Can always go to one of the many instances that defederated them. Not like there’s account-wide upvote points to lose or anything. (genuine suggestion)

If they’re using an app, they should check if their app supports blocking instances

There are many instances that have defederated them that you could join. Or if you’re really serious you could host your own.

Weirdly, YouTube’s algo propelled me down the Pinko-commie anarcho-socialist boy-we-suck-at-democracy rabbit hole. I was an avid BreadTuber long before I ever heard the name BreadTube.

Yeah, it started for me during Covid when I felt like I needed long-form podcasts/streamers in the background for noise while working from home. I think my progression was from The Worst Year Ever -> Chapo Trap House -> It Could Happen Here -> Philosophy Tube -> ContraPoints -> Vaush. TBF, I’ve been a leftist since before YouTube existed, probably starting with Chomsky, Einstein’s article, and random pirated documentaries.

Never had this problem, never used YT while logged on. Just incognito and careful search keywords. If the algo recommended something sus, immediately close your incognito session and open a new one.

I HAVE THE SHINEYIST MEAT BYCYCLE

I see 3 times the same headline. õ.Ô

I have read it 3 times and still don’t understand what it is about.

Now it’s 4. They multiply!

Very, Very Few People Are Falling Down the YouTube Rabbit Hole | The site’s crackdown on radicalization seems to have worked. But the world will never know what was happening before that::The site’s crackdown on radicalization seems to have worked. But the world will never know what was happening before that.

Thank you Big Brother for protecting me.

All my YouTube recommendations went downhill about 3 years ago. I am bombarded by rightwing Christian stuff no matter how many times I flag and complain.

I’m bombarded by Joe Rogan stuff. I keep blocking the channels but there is an endless stream of them

I always downvote, then block the channel whenever I get those. However, I think the mere act of going to the button to block the channel instead of just scrolling on immediately is telling the algorithm that I want more of that kind of video.

Watching a bit of breadtube stuff, I feel like the algorithm can’t determine what video is against stuff like that and what’s for, so I get recommended videos for whatever I don’t like instead of against.

that might actually be the issue. YouTube doesn’t differentiate between upvotes and downvotes really. to the algorithm you’re engaging in the content either way so it’s serving you more to keep you engaged.

I am also suspecting that downvoting or blocking is somehow interpreted as “engaged with the content so lets shove more of it”

I keep getting ‘rescue’ animal videos which involve people purposely putting puppies and kittens in distressing situations so they can ‘save them’. It’s sick, and no matter how often I block and report those videos they reappear next month. I also get a lot of ‘police shooting people’ videos which I also try to block.

I think it’s just a matter of fine tuning your preferences. I’ve never had an irrelevant video recommended to me within the last few years. All the recommendations have been great. Retro hardware reviews, video game gameplay guides, science videos, and other informational/engineering stuff.

Who did these stats? I’m getting more right wing propaganda than ever. Also Facebook is just as bad as ever. I really like stuff like the fediverse since I can control my feed.

Me, too. I’m always recommended Joe Rogan or Jordan Peterson videos, with a sprinkling of Ben Shapiro. I even got someone claiming the holocaust was overblown (i reported them). All within the past few months.

I don’t get recommended regular videos like that, but youtube shorts are full of that garbage. I suspect it’s a blind spot

because TikTok replaced YouTube in that regard

If it’s true that they have closed the radicalization rabbit hole then that is a huge achievement and very very good news.

Now that they’ve entrenched an entire alternative universe in an election-winning proportion of the population, they don’t need it anymore.

Unless YouTube is going to be deliberately directing people to deprogramming content it’s too late.

A lot of damage is done, certainly, but I think any success they have will depend on keeping up this bullshit. New voters are growing up all the time. The less chance for them to fall down the QAnon conspiracy after they just wanted to find some video game guide content, the better.